Evaluating RAG Pipeline Responses with Python

Introduction

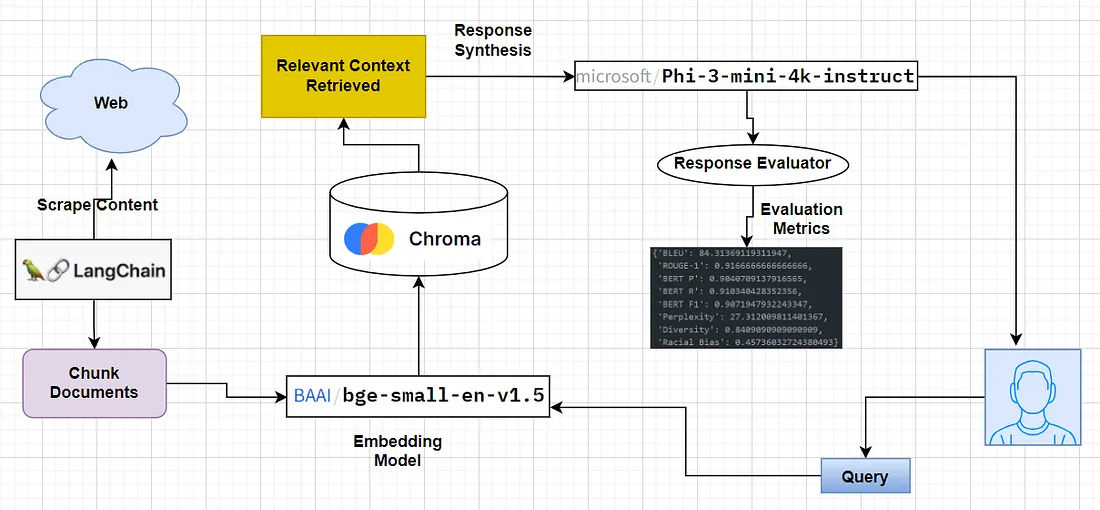

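Retrieval-augmented generation (RAG) is a method of enriching an LLM prompt with relevant data. Typically, the user prompt is converted into an embedding and matching documents are fetched from a vector store. The LLM is then called with the matching documents included as part of the prompt.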
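The retrieve-then-prompt flow described above can be sketched in plain Python. This is only an illustration: the bag-of-words `embed` function stands in for a real embedding model, the in-memory list stands in for a vector store, and all function names are hypothetical.

```python
import math


def embed(text: str) -> dict[str, float]:
    """Toy embedding: a bag-of-words count vector (stand-in for a real model)."""
    vector: dict[str, float] = {}
    for word in text.lower().split():
        vector[word] = vector.get(word, 0.0) + 1.0
    return vector


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(value * b.get(key, 0.0) for key, value in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, store: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(store, key=lambda doc: cosine(query_vec, embed(doc)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str]) -> str:
    """Place the retrieved documents in the prompt ahead of the question."""
    context = "\n\n".join(docs)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


store = [
    "LCEL enables composition of arbitrary sequences",
    "LangSmith provides a debugging experience",
    "Chroma is a vector store",
]
top_docs = retrieve("what enables composition", store, k=1)
prompt = build_prompt("what enables composition", top_docs)
print(prompt)
```

In a real pipeline the prompt would then be passed to the LLM; here it is only printed.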

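RAG evaluation assesses how well our pipeline performs. It analyzes the top results the system produces to measure the precision, recall, and faithfulness to the facts achieved in the retrieval phase. This lets us track and monitor pipeline performance automatically. When designing an evaluation strategy for a RAG application, both of these steps should be evaluated:

- Retrieving documents from the vector store

- Generating the LLM output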
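For the retrieval step, precision and recall can be computed against a hand-labelled set of relevant documents. The sketch below assumes such labels exist; the document ids and the function name `precision_recall_at_k` are made up for illustration.

```python
def precision_recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> tuple[float, float]:
    """Precision and recall of the top-k retrieved ids against labelled relevant ids."""
    top_k = retrieved[:k]
    hits = sum(1 for doc_id in top_k if doc_id in relevant)
    precision = hits / len(top_k) if top_k else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Hypothetical ranking returned by a retriever, and hypothetical labels
retrieved_ids = ["d1", "d4", "d2", "d9"]
relevant_ids = {"d1", "d2", "d3"}
precision, recall = precision_recall_at_k(retrieved_ids, relevant_ids, k=3)
print(precision, recall)
```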

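Evaluating these steps separately matters, because splitting RAG into stages makes it easier to pinpoint where a problem lies. There are several criteria for evaluating a RAG application: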

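Output-based

- Factuality (also called correctness): measures whether the LLM output agrees with the provided ground truth.

- Answer relevance: measures how directly the answer addresses the question.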

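Context-based

- Context adherence (also called "groundedness" or "faithfulness"): measures whether the LLM output is based on the provided context.

- Context recall: measures whether the context, compared with the provided ground truth, contains the correct information needed to generate the answer.

- Context relevance: measures how much of the context is needed to answer the given query.

- Custom metrics: you know your application better than anyone. Create test cases that focus on what matters to you (for example, whether a particular document is cited, or whether the response is too long).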
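The two custom checks mentioned in the last bullet (document citation and response length) might look like the sketch below. The function names and the word limit are illustrative assumptions, not part of any library.

```python
def cites_source(response: str, source_title: str) -> bool:
    """True if the response mentions the expected source by title."""
    return source_title.lower() in response.lower()


def within_length(response: str, max_words: int = 100) -> bool:
    """True if the response stays within a word budget."""
    return len(response.split()) <= max_words


def run_custom_checks(response: str, source_title: str, max_words: int = 100) -> dict[str, bool]:
    """Collect all custom checks into one report."""
    return {
        "cites_source": cites_source(response, source_title),
        "within_length": within_length(response, max_words),
    }


checks = run_custom_checks(
    "See LangChain v0.1.0 for details on LCEL.",
    source_title="LangChain v0.1.0",
    max_words=20,
)
print(checks)
```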

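Here we evaluate a RAG pipeline based on the context retrieved from the vector store and the response generated by the LLM. We evaluate the pipeline using the following metrics: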

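* BLEU (Bilingual Evaluation Understudy) score:

The BLEU score is a widely used metric for machine translation, where the goal is to automatically translate text from one language to another. It assesses translation quality by comparing the machine-generated translation with a set of reference translations provided by human translators.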
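To make the comparison concrete, here is a simplified BLEU sketch: clipped n-gram precision up to bigrams, combined by a geometric mean and multiplied by a brevity penalty. It assumes whitespace tokenization and a single reference, and it returns a value between 0 and 1, whereas sacrebleu reports scores on a 0 to 100 scale and uses up to 4-grams with smoothing. The function names are hypothetical.

```python
import math
from collections import Counter


def ngram_counts(tokens: list[str], n: int) -> Counter:
    """Count every n-gram in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def clipped_precision(candidate: list[str], reference: list[str], n: int) -> float:
    """Share of candidate n-grams found in the reference, each clipped to its reference count."""
    cand = ngram_counts(candidate, n)
    ref = ngram_counts(reference, n)
    overlap = sum(min(count, ref[gram]) for gram, count in cand.items())
    total = sum(cand.values())
    return overlap / total if total else 0.0


def simple_bleu(candidate: str, reference: str, max_n: int = 2) -> float:
    """Geometric mean of clipped precisions times the brevity penalty."""
    cand, ref = candidate.split(), reference.split()
    precisions = [clipped_precision(cand, ref, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0:
        return 0.0
    geo_mean = math.exp(sum(math.log(p) for p in precisions) / max_n)
    # Penalize candidates that are shorter than the reference
    brevity = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return brevity * geo_mean


reference = "the cat sat on the mat"
print(simple_bleu("the cat sat on the mat", reference))
print(simple_bleu("the cat sat", reference))
```

The second call shows the brevity penalty at work: every n-gram of the short candidate appears in the reference, yet the score drops because the candidate is half the reference length.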

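* ROUGE (Recall-Oriented Understudy for Gisting Evaluation) score:

ROUGE is a set of metrics commonly used for text summarization, where the goal is to automatically produce a concise summary of a longer text. It is designed to assess the quality of a machine-generated summary by comparing it with reference summaries provided by humans.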
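A minimal ROUGE-1 sketch follows, assuming lowercase whitespace tokens and no stemming (the rouge-score package used later can apply a stemmer, so its numbers may differ). The function name `rouge1` is illustrative.

```python
from collections import Counter


def rouge1(candidate: str, reference: str) -> tuple[float, float, float]:
    """Unigram-overlap precision, recall and F1 between candidate and reference."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Counter intersection keeps the minimum count of each shared token
    overlap = sum((cand & ref).values())
    precision = overlap / sum(cand.values()) if cand else 0.0
    recall = overlap / sum(ref.values()) if ref else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


precision, recall, f1 = rouge1("the cat sat", "the cat sat on the mat")
print(precision, recall, f1)
```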

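* BERTScore

BERTScore is a metric for evaluating the quality of text generation models (for example machine translation or summarization). It uses contextual embeddings from a pretrained BERT model to represent the generated text and the reference text, then computes the cosine similarity between those embeddings.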
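The matching step can be sketched with hand-made vectors. Precision averages, over candidate tokens, the best cosine similarity to any reference token; recall does the same from the reference side. The two-dimensional vectors below are invented stand-ins for BERT embeddings, and the sketch omits the optional idf weighting and baseline rescaling of the real metric.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two dense vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def greedy_match_score(cand_vecs: list[list[float]], ref_vecs: list[list[float]]) -> tuple[float, float, float]:
    """Greedy token matching in the style of BERTScore, on precomputed token vectors."""
    # Precision: each candidate token is matched to its most similar reference token
    precision = sum(max(cosine(c, r) for r in ref_vecs) for c in cand_vecs) / len(cand_vecs)
    # Recall: each reference token is matched to its most similar candidate token
    recall = sum(max(cosine(r, c) for c in cand_vecs) for r in ref_vecs) / len(ref_vecs)
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Made-up token embeddings: two candidate tokens, two reference tokens
cand_vecs = [[1.0, 0.0], [0.0, 1.0]]
ref_vecs = [[1.0, 0.0], [1.0, 0.0]]
precision, recall, f1 = greedy_match_score(cand_vecs, ref_vecs)
print(precision, recall, f1)
```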

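* Perplexity

Perplexity is a metric used in natural language processing and machine learning to evaluate the performance of a language model. It measures how well the model predicts the next word or character given the context provided by the preceding words or characters. The lower the perplexity, the better the model is at predicting the next word or character.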
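Numerically, perplexity is the exponential of the average negative log-probability the model assigns to each token. The sketch below assumes the per-token probabilities are already available; the values are invented for illustration.

```python
import math


def perplexity(token_probs: list[float]) -> float:
    """exp of the mean negative log-probability of the observed tokens."""
    mean_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_nll)


# A model that assigns 0.25 to every token is as uncertain as a 4-way guess
uniform = perplexity([0.25, 0.25, 0.25, 0.25])
confident = perplexity([0.9, 0.9])
unsure = perplexity([0.1, 0.1])
print(uniform, confident, unsure)
```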

* Diversity

Diversity measures the uniqueness of bigrams in the generated output. Higher values indicate more diverse and varied output.
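One common way to express this is the distinct-n ratio: unique n-grams divided by total n-grams. The sketch below uses that normalization as an assumption; the evaluator later in this article divides by the number of tokens instead, so its values will differ slightly.

```python
def distinct_n(text: str, n: int = 2) -> float:
    """Ratio of unique n-grams to total n-grams in whitespace-tokenized text."""
    tokens = text.split()
    grams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
    return len(set(grams)) / len(grams) if grams else 0.0


repetitive = distinct_n("a b a b")  # bigrams: (a, b), (b, a), (a, b)
varied = distinct_n("a b c d")      # every bigram is unique
print(repetitive, varied)
```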

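* Racial bias

Racial bias in natural language processing (NLP) is the unfair treatment of people based on race. It can arise in several ways, including:

- Language models: language models may produce biased language and struggle with dialects and accents that are poorly represented in the training data. This can lead to discrimination and reinforce racial stereotypes.

- Data: NLP systems can reflect the biases present in the language data used to train them.

- Annotation: a mismatch between the annotator population and the data can introduce bias.

- Research design: the design of a study can also introduce bias.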

Code implementation

Install the required dependencies

!pip install sacrebleu
!pip install rouge-score
!pip install bert-score
!pip install transformers
!pip install nltk
!pip install textblob
!pip install -qU dataset
!pip install -qU langchain
!pip install -qU langchain_community
!pip install -qU chromadb
!pip install -qU langchain-chroma
!pip install -qU langchain-huggingface
!pip install -qU sentence-transformers
!pip install -qU Flashrank

Building the RAG pipeline with Chroma and Phi-3-mini-4k-instruct

Instantiate the LLM

from langchain_huggingface import HuggingFacePipeline

# Load Phi-3-mini through a local Hugging Face text-generation pipeline
llm = HuggingFacePipeline.from_model_id(
    model_id="microsoft/Phi-3-mini-4k-instruct",
    task="text-generation",
    pipeline_kwargs={
        "max_new_tokens": 100,  # cap the length of each generated answer
        "top_k": 50,
        "temperature": 0.1,
    },
)

Instantiate the embedding model

# Import from langchain-huggingface, which is installed above
from langchain_huggingface import HuggingFaceEmbeddings

model_name = "BAAI/bge-small-en-v1.5"
model_kwargs = {"device": "cuda"}  # use "cpu" if no GPU is available
encode_kwargs = {"normalize_embeddings": False}
embeddings = HuggingFaceEmbeddings(
    model_name=model_name,
    model_kwargs=model_kwargs,
    encode_kwargs=encode_kwargs,
)

Download the data

from langchain_community.document_loaders import WebBaseLoader

# Fetch the blog post that serves as the knowledge source
loader = WebBaseLoader(
    "https://blog.langchain.dev/langchain-v0-1-0/"
)
documents = loader.load()
print(len(documents))

Chunk the documents

from langchain.text_splitter import RecursiveCharacterTextSplitter

text_splitter = RecursiveCharacterTextSplitter(
    chunk_size=1250,     # maximum characters per chunk
    chunk_overlap=100,   # characters shared between neighbouring chunks
    length_function=len,
    is_separator_regex=False,
)

# Split the loaded documents into overlapping chunks
split_docs = text_splitter.split_documents(documents)
print(len(split_docs))
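The roles of `chunk_size` and `chunk_overlap` can be illustrated with a fixed-size sliding window. This is a deliberate simplification and not how `RecursiveCharacterTextSplitter` works internally: the real splitter also tries to break on separators such as paragraphs and sentences. The helper name `chunk_text` is hypothetical.

```python
def chunk_text(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Cut text into windows of chunk_size characters that overlap by chunk_overlap."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current window has reached the end of the text
        if start + chunk_size >= len(text):
            break
        start += step
    return chunks


chunks = chunk_text("abcdefghij", chunk_size=4, chunk_overlap=1)
print(chunks)
```

Each chunk repeats the last character of the previous one, which is the overlap that helps keep context from being cut at a chunk boundary.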

Set up the vector store

from langchain_community.vectorstores import Chroma

vectorstore = Chroma(
    embedding_function=embeddings,
    persist_directory="./chromadb1",
    collection_name="full_documents",
)

Add the document chunks

vectorstore.add_documents(split_docs)
# Write the collection to disk (newer Chroma versions persist automatically)
vectorstore.persist()

Advanced retrieval with FlashRank

from langchain.retrievers import ContextualCompressionRetriever
from langchain.retrievers.document_compressors import FlashrankRerank

# First stage: fetch the 10 nearest chunks from the vector store
retriever = vectorstore.as_retriever(search_kwargs={"k": 10})

# Second stage: rerank those chunks and keep the most relevant ones
compressor = FlashrankRerank()
compression_retriever = ContextualCompressionRetriever(
    base_compressor=compressor,
    base_retriever=retriever,
)
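The two-stage idea behind this setup (a broad first-stage retrieval followed by a more selective reranker) can be sketched without any library. The scoring functions below are toy stand-ins: word overlap replaces the vector search and Jaccard similarity replaces the FlashRank model. All names and documents are made up.

```python
def first_stage(query: str, docs: list[str], k: int) -> list[str]:
    """Cheap, recall-oriented retrieval: rank by the number of shared words."""
    query_words = set(query.lower().split())
    return sorted(
        docs,
        key=lambda doc: len(query_words & set(doc.lower().split())),
        reverse=True,
    )[:k]


def rerank(query: str, candidates: list[str], top_n: int) -> list[tuple[float, str]]:
    """Toy reranker: Jaccard similarity, which penalizes long, unfocused documents."""
    query_words = set(query.lower().split())

    def score(doc: str) -> float:
        words = set(doc.lower().split())
        return len(query_words & words) / len(query_words | words)

    scored = [(score(doc), doc) for doc in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_n]


docs = [
    "composability of chains and composability of prompts in a long document about many other things",
    "composability of chains",
    "observability with LangSmith",
]
query = "composability of chains"
candidates = first_stage(query, docs, k=2)
reranked = rerank(query, candidates, top_n=1)
print(reranked)
```

The first stage cannot tell the two matching documents apart, while the reranker prefers the focused one.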

Build the RetrievalQA chain

from langchain.chains import RetrievalQA

# "stuff" places all retrieved chunks into a single prompt
qa = RetrievalQA.from_chain_type(
    llm=llm,
    chain_type="stuff",
    retriever=compression_retriever,
    return_source_documents=True,
)

Ask a question

result = qa.invoke({"query": "What is mentioned about Composability?"})
print(result)

#########################RESPONSE##############################

{'query': 'What is mentioned about Composability?',

'result': "Use the following pieces of context to answer the question at the end. If you don't know the answer, just say that you don't know, don't try to make up an answer.\n\nchanges. These can now be reflected on an individual integration basis with proper versioning in the standalone integration package.ObservabilityBuilding LLM applications involves putting a non-deterministic component at the center of your system. These models can often output unexpected results, so having visibility into exactly what is happening in your system is integral. 💡We want to make langchain as observable and as debuggable as possible, whether through architectural decisions or tools we build on the side.We’ve set about this in a few ways.The main way we’ve tackled this is by building LangSmith. One of the main value props that LangSmith provides is a best-in-class debugging experience for your LLM application. We log exactly what steps are happening, what the inputs of each step are, what the outputs of each step are, how long each step takes, and more data. We display this in a user-friendly way, allowing you to identify which steps are taking the longest, enter a playground to debug unexpected LLM responses, track token usage and more. Even in private beta, the demand for LangSmith has been overwhelming, and we’re investing a lot in scalability so that we can release a public beta and then make it generally available\n\na lot in scalability so that we can release a public beta and then make it generally available in the coming months. We are also already supporting an enterprise version, which comes with a within-VPC deployment for enterprises with strict data privacy policies.We’ve also tackled observability in other ways. We’ve long had built in verbose and debug modes for different levels of logging throughout the pipeline. 
We recently introduced methods to visualize the chain you created, as well as get all prompts used.ComposabilityWhile it’s helpful to have prebuilt chains to get started, we very often see teams breaking outside of those architectures and wanting to customize their chain - not only customize the prompt, but also customize different parts of the orchestration. 💡Over the past few months, we’ve invested heavily in LangChain Expression Language (LCEL). This enables composition of arbitrary sequences, providing a lot of the same benefits as data orchestration tools do for data engineering pipelines (batching, parallelization, fallbacks). It also provides some benefits unique to LLM workloads - mainly LLM-specific observability (covered above), and streaming, covered later in this post.The components for LCEL are in\n\nbug fixes. See more towards the end of this post on our plans for that.While re-architecting the package towards a path to a stable 0.1 release, we took the opportunity to talk to hundreds of developers about why they use LangChain and what they love about it. This input guided our direction and focus. We also used it as an opportunity to bring parity to the Python and JavaScript versions in the core areas outlined below. 💡While certain integrations and more tangential chains may be language specific, core abstractions and key functionality are implemented equally in both the Python and JavaScript packages.We want to share what we’ve heard and our plan to continually improve LangChain. We hope that sharing these learnings will increase transparency into our thinking and decisions, allowing others to better use, understand, and contribute to LangChain. After all, a huge part of LangChain is our community – both the user base and the 2000+ contributors – and we want everyone to come along for the journey.\xa0Third Party IntegrationsOne of the things that people most love about LangChain is how easy we make it to get started building on any stack. 
We have almost 700 integrations, ranging from LLMs to vector stores to tools for agents\n\nQuestion: What is mentioned about Composability?\nHelpful Answer:\nComposability: While it’s helpful to have prebuilt chains to get started, we very often see teams breaking outside of those architectures and wanting to customize their chain - not only customize the prompt, but also customize different parts of the orchestration. 💡Over the past few months, we’ve invested heavily in LangChain Expression Language (LCEL). This enables composition of arbitrary sequences, providing a lot of the same benefits as",

'source_documents': [

Document(page_content='changes. These can now be reflected on an individual integration basis with proper versioning in the standalone integration package.ObservabilityBuilding LLM applications involves putting a non-deterministic component at the center of your system. These models can often output unexpected results, so having visibility into exactly what is happening in your system is integral. 💡We want to make langchain as observable and as debuggable as possible, whether through architectural decisions or tools we build on the side.We’ve set about this in a few ways.The main way we’ve tackled this is by building LangSmith. One of the main value props that LangSmith provides is a best-in-class debugging experience for your LLM application. We log exactly what steps are happening, what the inputs of each step are, what the outputs of each step are, how long each step takes, and more data. We display this in a user-friendly way, allowing you to identify which steps are taking the longest, enter a playground to debug unexpected LLM responses, track token usage and more. Even in private beta, the demand for LangSmith has been overwhelming, and we’re investing a lot in scalability so that we can release a public beta and then make it generally available', metadata={'language': 'en', 'source': 'https://blog.langchain.dev/langchain-v0-1-0/', 'title': 'LangChain v0.1.0', 'relevance_score': 0.9986438}),

Document(page_content='a lot in scalability so that we can release a public beta and then make it generally available in the coming months. We are also already supporting an enterprise version, which comes with a within-VPC deployment for enterprises with strict data privacy policies.We’ve also tackled observability in other ways. We’ve long had built in verbose and debug modes for different levels of logging throughout the pipeline. We recently introduced methods to visualize the chain you created, as well as get all prompts used.ComposabilityWhile it’s helpful to have prebuilt chains to get started, we very often see teams breaking outside of those architectures and wanting to customize their chain - not only customize the prompt, but also customize different parts of the orchestration. 💡Over the past few months, we’ve invested heavily in LangChain Expression Language (LCEL). This enables composition of arbitrary sequences, providing a lot of the same benefits as data orchestration tools do for data engineering pipelines (batching, parallelization, fallbacks). It also provides some benefits unique to LLM workloads - mainly LLM-specific observability (covered above), and streaming, covered later in this post.The components for LCEL are in', metadata={'language': 'en', 'source': 'https://blog.langchain.dev/langchain-v0-1-0/', 'title': 'LangChain v0.1.0', 'relevance_score': 0.9979234}),

Document(page_content='bug fixes. See more towards the end of this post on our plans for that.While re-architecting the package towards a path to a stable 0.1 release, we took the opportunity to talk to hundreds of developers about why they use LangChain and what they love about it. This input guided our direction and focus. We also used it as an opportunity to bring parity to the Python and JavaScript versions in the core areas outlined below. 💡While certain integrations and more tangential chains may be language specific, core abstractions and key functionality are implemented equally in both the Python and JavaScript packages.We want to share what we’ve heard and our plan to continually improve LangChain. We hope that sharing these learnings will increase transparency into our thinking and decisions, allowing others to better use, understand, and contribute to LangChain. After all, a huge part of LangChain is our community – both the user base and the 2000+ contributors – and we want everyone to come along for the journey.\xa0Third Party IntegrationsOne of the things that people most love about LangChain is how easy we make it to get started building on any stack. We have almost 700 integrations, ranging from LLMs to vector stores to tools for agents', metadata={'language': 'en', 'source': 'https://blog.langchain.dev/langchain-v0-1-0/', 'title': 'LangChain v0.1.0', 'relevance_score': 0.99791926})

]

}

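Code for evaluating the responses generated by the RAG pipeline

Evaluation

# The generated text and the concatenated retrieved chunks
answer = result['result']
context = " ".join([d.page_content for d in result['source_documents']])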
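In the response printed above, `result['result']` contains the whole prompt (instructions and retrieved context) followed by the text after `Helpful Answer:`. If only the model's answer should be scored, it can be cut out first. The sketch below assumes the prompt template keeps ending with that marker; `extract_answer` is a hypothetical helper, not part of LangChain.

```python
def extract_answer(generated: str, marker: str = "Helpful Answer:") -> str:
    """Return the text after the last occurrence of marker, or the whole text if absent."""
    _, found, tail = generated.rpartition(marker)
    return tail.strip() if found else generated.strip()


full_text = "Use the following pieces of context...\n\nQuestion: q\nHelpful Answer:\nLCEL enables composition."
only_answer = extract_answer(full_text)
print(only_answer)
```

Scoring the extracted answer instead of the full text avoids inflating overlap metrics with prompt text that was copied verbatim from the context.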

import torch
from sacrebleu import corpus_bleu
from rouge_score import rouge_scorer
from bert_score import score
from transformers import GPT2LMHeadModel, GPT2Tokenizer, pipeline
import nltk
from nltk.util import ngrams

class RAGEvaluator:
    def __init__(self):
        # GPT-2 is used only to compute perplexity
        self.gpt2_model, self.gpt2_tokenizer = self.load_gpt2_model()
        # Note: this checkpoint is published as a hate-speech classifier, so using it
        # in a zero-shot pipeline gives only a rough proxy for bias
        self.bias_pipeline = pipeline("zero-shot-classification", model="Hate-speech-CNERG/dehatebert-mono-english")

    def load_gpt2_model(self):
        model = GPT2LMHeadModel.from_pretrained('gpt2')
        tokenizer = GPT2Tokenizer.from_pretrained('gpt2')
        return model, tokenizer

    def evaluate_bleu_rouge(self, candidates, references):
        # sacrebleu expects a list of hypotheses and a list of reference lists
        bleu_score = corpus_bleu(candidates, [references]).score
        scorer = rouge_scorer.RougeScorer(['rouge1', 'rouge2', 'rougeL'], use_stemmer=True)
        rouge_scores = [scorer.score(ref, cand) for ref, cand in zip(references, candidates)]
        rouge1 = sum([score['rouge1'].fmeasure for score in rouge_scores]) / len(rouge_scores)
        return bleu_score, rouge1

    def evaluate_bert_score(self, candidates, references):
        P, R, F1 = score(candidates, references, lang="en", model_type='bert-base-multilingual-cased')
        return P.mean().item(), R.mean().item(), F1.mean().item()

    def evaluate_perplexity(self, text):
        encodings = self.gpt2_tokenizer(text, return_tensors='pt')
        max_length = self.gpt2_model.config.n_positions
        stride = 512
        lls = []
        # Slide a window over the text so inputs longer than the model limit can be scored
        for i in range(0, encodings.input_ids.size(1), stride):
            begin_loc = max(i + stride - max_length, 0)
            end_loc = min(i + stride, encodings.input_ids.size(1))
            trg_len = end_loc - i
            input_ids = encodings.input_ids[:, begin_loc:end_loc]
            target_ids = input_ids.clone()
            # Ignore the overlapping prefix so each token is scored only once
            target_ids[:, :-trg_len] = -100
            with torch.no_grad():
                outputs = self.gpt2_model(input_ids, labels=target_ids)
                log_likelihood = outputs[0] * trg_len
            lls.append(log_likelihood)
        ppl = torch.exp(torch.stack(lls).sum() / end_loc)
        return ppl.item()

    def evaluate_diversity(self, texts):
        all_tokens = [tok for text in texts for tok in text.split()]
        unique_bigrams = set(ngrams(all_tokens, 2))
        # Unique bigrams normalized by the total number of tokens
        diversity_score = len(unique_bigrams) / len(all_tokens) if all_tokens else 0
        return diversity_score

    def evaluate_racial_bias(self, text):
        results = self.bias_pipeline([text], candidate_labels=["hate speech", "not hate speech"])
        bias_score = results[0]['scores'][results[0]['labels'].index('hate speech')]
        return bias_score

    def evaluate_all(self, question, response, reference):
        candidates = [response]
        references = [reference]
        bleu, rouge1 = self.evaluate_bleu_rouge(candidates, references)
        bert_p, bert_r, bert_f1 = self.evaluate_bert_score(candidates, references)
        perplexity = self.evaluate_perplexity(response)
        diversity = self.evaluate_diversity(candidates)
        racial_bias = self.evaluate_racial_bias(response)
        return {
            "BLEU": bleu,
            "ROUGE-1": rouge1,
            "BERT P": bert_p,
            "BERT R": bert_r,
            "BERT F1": bert_f1,
            "Perplexity": perplexity,
            "Diversity": diversity,
            "Racial Bias": racial_bias
        }

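Instantiate the evaluator

evaluator = RAGEvaluator()
question = "What is mentioned about Composability?"
response = answer
# The retrieved context is used as the reference text, so the scores measure
# overlap with the context rather than with a ground-truth answer
reference = context
metrics = evaluator.evaluate_all(question, response, reference)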

Format the generated metrics

for k, v in metrics.items():
    if k == 'BLEU':
        print(f"BLEU measures the overlap between the generated output and reference text based on n-grams. Higher scores indicate better match.score obtained :{v}")
    elif k == "ROUGE-1":
        print(f"ROUGE-1 measures the overlap of unigrams between the generated output and reference text. Higher scores indicate better match.Score obtained:{v}")
    elif k == 'BERT P':
        # Print all three BERTScore values together under one description
        print(f"BERTScore evaluates the semantic similarity between the generated output and reference text using BERT embeddings.")
        print(f"\n\n**BERT Precision**: {metrics['BERT P']}")
        print(f"**BERT Recall**: {metrics['BERT R']}")
        print(f"**BERT F1 Score**: {metrics['BERT F1']}")
    elif k == 'Perplexity':
        print(f"Perplexity measures how well a language model predicts the text. Lower values indicate better fluency and coherence. score obtained :{v}")
    elif k == 'Diversity':
        print(f"Diversity measures the uniqueness of bigrams in the generated output. Higher values indicate more diverse and varied output.score obtained:{v}")
    elif k == 'Racial Bias':
        print(f"Racial Bias score indicates the presence of biased language in the generated output. Higher scores indicate more bias.score obtained:{v}")

* BLEU measures the overlap between the generated output and reference text based on n-grams. Higher scores indicate better match.score obtained :84.31369119311947

* ROUGE-1 measures the overlap of unigrams between the generated output and reference text. Higher scores indicate better match.Score obtained:0.9166666666666666

* BERTScore evaluates the semantic similarity between the generated output and reference text using BERT embeddings.

**BERT Precision**:0.9040709137916565

**BERT Recall**:0.910340428352356

**BERT F1 Score**:0.9071947932243347

* Perplexity measures how well a language model predicts the text. Lower values indicate better fluency and coherence. score obtained :27.312009811401367

* Diversity measures the uniqueness of bigrams in the generated output. Higher values indicate more diverse and varied output.score obtained:0.8409090909090909

* Racial Bias score indicates the presence of biased language in the generated output. Higher scores indicate more bias.score obtained:

Conclusion

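Here we used Python to build a RAG response evaluator based on basic language evaluation metrics. Although it relies on the same metrics that existing evaluation tools use, the solution does not depend on any of them.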