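[Guide] Semantic Chunking for RAG

What is chunking?

To fit within an LLM's context window, we usually split text into smaller parts or segments. This is called chunking.

What is RAG?

Although LLMs can generate text that is both meaningful and grammatically correct, they suffer from a problem known as hallucination. A "hallucination" is when an LLM confidently produces a wrong answer: it makes something up and presents it as if it were true. This has been a major problem since LLMs were introduced, because it leads to incorrect, non-factual answers. Retrieval Augmented Generation (RAG) was introduced to address it.

In RAG, we take a list of documents or document chunks and encode them into numerical representations called vector embeddings, where a single vector embedding represents a single document chunk, and store them in a database called a vector store. The models that encode chunks into embeddings are called encoder models or bi-encoders. These encoders are trained on large corpora, which makes them powerful enough to encode a document chunk into a single vector embedding.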
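The encode, store, and retrieve loop described above can be sketched with a toy example. The bag-of-words `embed` function is a hypothetical stand-in for a real bi-encoder, and the in-memory list stands in for a vector store; none of these names come from the article or from any library.

```python
import math
from collections import Counter

def embed(text, vocab):
    """Toy stand-in for an encoder: a bag-of-words count vector over `vocab`."""
    counts = Counter(text.lower().split())
    return [float(counts[word]) for word in vocab]

def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def build_store(chunks):
    """Encode every chunk once and keep (chunk, vector) pairs in memory."""
    vocab = sorted({word for chunk in chunks for word in chunk.lower().split()})
    return vocab, [(chunk, embed(chunk, vocab)) for chunk in chunks]

def retrieve(query, store, k=1):
    """Return the k chunks whose vectors are most similar to the query vector."""
    vocab, entries = store
    query_vec = embed(query, vocab)
    ranked = sorted(entries, key=lambda entry: cosine(query_vec, entry[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]

chunks = [
    "bert is pre-trained with a masked language model objective",
    "chroma is a vector store for embeddings",
    "groq serves models with low latency",
]
store = build_store(chunks)
top = retrieve("which vector store holds embeddings", store, k=1)
print(top)
```

A real pipeline replaces `embed` with a trained model and the list with a vector database, but the ranking step is the same idea.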

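Retrieval depends heavily on how chunks are represented and stored in the vector store. In general, finding the right chunk size for a given text is a very hard problem.

Retrieval can be improved with different retrieval methods, but it can also be improved with a better chunking strategy.

Different chunking methods

- Fixed-size chunking

- Recursive chunking

- Document-specific chunking

- Semantic chunking

- Agentic chunking

Fixed-size chunking: This is the most common and most straightforward approach. We simply decide the number of tokens per chunk and, optionally, how much overlap there should be between chunks. In general we want to keep some overlap so that semantic context is not lost at chunk boundaries. For most common cases, fixed-size chunking is the best choice. Compared with other forms of chunking it is computationally cheap and simple to use, since it does not require any NLP library.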
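As a minimal sketch of fixed-size chunking with overlap, assuming the text has already been tokenized (here by whitespace), the function name and parameters below are illustrative and not taken from any library:

```python
def fixed_size_chunks(tokens, chunk_size, overlap=0):
    """Split a token list into windows of `chunk_size` that share `overlap` tokens."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        # Stop once the current window already reaches the end of the input.
        if start + chunk_size >= len(tokens):
            break
    return chunks

tokens = "the quick brown fox jumps over the lazy dog".split()
chunks = fixed_size_chunks(tokens, chunk_size=4, overlap=1)
for chunk in chunks:
    print(chunk)
```

Each chunk starts with the last token of the previous one, which is the overlap that keeps context from being cut off at a boundary.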

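Recursive chunking: Recursive chunking splits the input text into smaller chunks hierarchically and iteratively, using a set of separators. If the first attempt at splitting does not produce chunks of the desired size or structure, the method calls itself recursively on the resulting chunks with a different separator or criterion until the desired chunk size or structure is reached. This means that the chunks will not all be exactly the same size, but they will still tend toward a similar size. It keeps the advantages of fixed-size chunking and overlap.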
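The recursion can be sketched in a few lines. This is a simplified illustration of the idea, not LangChain's `RecursiveCharacterTextSplitter` implementation; the function name, the separator order, and the greedy merge step are assumptions made for the example, and overlap is left out.

```python
def recursive_split(text, max_len, separators=("\n\n", "\n", " ")):
    """Split `text` into pieces of at most `max_len` characters.

    Tries the coarsest separator first and only falls back to finer
    separators for the parts that are still too long.
    """
    if len(text) <= max_len:
        return [text] if text else []
    if not separators:
        # No separator left: fall back to a hard cut every max_len characters.
        return [text[i:i + max_len] for i in range(0, len(text), max_len)]
    sep, finer = separators[0], separators[1:]
    pieces = []
    for part in text.split(sep):
        pieces.extend(recursive_split(part, max_len, finer))
    # Greedily merge neighbouring pieces back together while they still fit.
    merged = []
    for piece in pieces:
        if merged and len(merged[-1]) + len(sep) + len(piece) <= max_len:
            merged[-1] = merged[-1] + sep + piece
        else:
            merged.append(piece)
    return merged

text = "alpha beta\n\ngamma delta epsilon"
result = recursive_split(text, max_len=12)
print(result)
```

The first paragraph fits as a whole, while the second is too long and gets split on spaces, so the chunks end up similar in size but not identical.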

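Document-specific chunking: This approach takes the structure of the document into account. Instead of using a fixed number of characters or a recursive process, it creates chunks that align with the logical sections of the document, such as paragraphs or subsections. This preserves the author's organization of the content and keeps the text coherent. It makes the retrieved information more relevant and useful, especially for structured documents with clearly defined sections. It can handle formats such as Markdown and HTML.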
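For Markdown, one simple way to follow the document structure is to start a new chunk at every heading. The sketch below assumes ATX-style headings (`#` to `######`) and ignores edge cases such as `#` lines inside code fences; the function name is illustrative.

```python
import re

HEADING = re.compile(r"^(#{1,6})\s+(.*)$")

def split_markdown_by_heading(markdown_text):
    """Return (heading, body) pairs, one per Markdown section."""
    sections = []
    title, lines = None, []

    def flush():
        # Keep a section if it has a heading or any non-blank body text.
        if title is not None or any(line.strip() for line in lines):
            sections.append((title, "\n".join(lines).strip()))

    for line in markdown_text.splitlines():
        match = HEADING.match(line)
        if match:
            flush()
            title, lines = match.group(2).strip(), []
        else:
            lines.append(line)
    flush()
    return sections

doc = "# Intro\nBERT is a language model.\n\n## Training\nIt uses masked LM.\nAnd NSP.\n"
sections = split_markdown_by_heading(doc)
for heading, body in sections:
    print(heading, "->", repr(body))
```

Text that appears before the first heading is kept as a section whose heading is `None`.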

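Semantic chunking: Semantic chunking takes the relationships within the text into account. It divides the text into meaningful, semantically complete chunks. This helps preserve the integrity of the information during retrieval and leads to more accurate, more contextually appropriate results. It is slower than the previous chunking strategies.

Agentic chunking: The idea here is to process a document the way a human would.

- We start at the top of the document and treat the first part as a chunk.

- We continue down the document, deciding whether each new sentence or piece of information belongs to the current chunk or should start a new one.

- We keep doing this until we reach the end of the document.

Because of the time needed to process multiple LLM calls and the cost of those calls, this approach is still experimental and not ready for production use. At the time of writing, no publicly available library implements it.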
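The three steps above amount to a single pass over the sentences with a yes/no decision at each one. In the sketch below that decision is a pluggable function; `keyword_judge` is a hypothetical, deliberately crude stand-in for the LLM call an agentic chunker would make, used only so the example runs offline.

```python
def agentic_chunks(sentences, belongs_to_chunk):
    """Walk the document top to bottom, asking the judge where each sentence goes."""
    chunks = []
    for sentence in sentences:
        if chunks and belongs_to_chunk(chunks[-1], sentence):
            chunks[-1].append(sentence)   # same topic: extend the current chunk
        else:
            chunks.append([sentence])     # new topic (or first sentence): start a chunk
    return chunks

def _content_words(text):
    """Lower-cased words longer than three letters, with trailing punctuation removed."""
    words = (word.strip(".,").lower() for word in text.split())
    return {word for word in words if len(word) > 3}

def keyword_judge(chunk, sentence):
    """Hypothetical stand-in for an LLM decision: do chunk and sentence share a content word?"""
    chunk_words = set().union(*(_content_words(s) for s in chunk))
    return bool(chunk_words & _content_words(sentence))

sentences = [
    "BERT uses a masked language model.",
    "The masked tokens are predicted from context.",
    "Chroma stores vectors on disk.",
]
chunks = agentic_chunks(sentences, keyword_judge)
print(chunks)
```

Replacing `keyword_judge` with a function that prompts an LLM makes the cost issue concrete: there is one model call per sentence.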

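Here we will try out semantic chunking and compare it with naive recursive chunking.

Steps for comparing the methods

- Load the document

- Chunk the document with both methods: semantic chunking and naive recursive chunking (RecursiveCharacterTextSplitter).

- Use RAGAS to evaluate qualitative and quantitative improvements

Semantic chunking

Semantic chunking extracts an embedding for every sentence in the document, compares the similarity between sentences, and then groups together the sentences whose embeddings are most similar.

By focusing on the meaning and context of the text, semantic chunking can significantly improve retrieval quality. It is the best choice when preserving the semantic integrity of the text is essential.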
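One common way to turn this idea into code is to compare each sentence only with its neighbour and start a new chunk wherever the embedding distance jumps. The sketch below takes that simplified route; the hand-made two-dimensional vectors are placeholders for real sentence embeddings, and the fixed threshold is an assumption for the example.

```python
import math

def cosine_distance(a, b):
    """1 - cosine similarity; treated as maximal (1.0) if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if not norm_a or not norm_b:
        return 1.0
    return 1.0 - dot / (norm_a * norm_b)

def semantic_chunks(sentences, embeddings, threshold):
    """Group consecutive sentences, splitting where neighbouring embeddings diverge."""
    if not sentences:
        return []
    groups = [[sentences[0]]]
    for i in range(1, len(sentences)):
        if cosine_distance(embeddings[i - 1], embeddings[i]) > threshold:
            groups.append([sentences[i]])    # large jump in meaning: new chunk
        else:
            groups[-1].append(sentences[i])  # similar meaning: same chunk
    return [" ".join(group) for group in groups]

sentences = ["BERT is pre-trained.", "BERT is then fine-tuned.", "Chroma stores vectors."]
embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]  # toy vectors, not model output
chunks = semantic_chunks(sentences, embeddings, threshold=0.5)
print(chunks)
```

The first two vectors point in almost the same direction, so their sentences stay together, while the third is nearly orthogonal and starts a new chunk.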

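Technology stack used

- LangChain: LangChain is an open-source framework designed to simplify building applications with large language models (LLMs). It provides a standard interface for chains, many integrations with other tools, and end-to-end chains for common applications.

- LLM: Groq's Language Processing Unit (LPU) is a technology designed to greatly speed up AI workloads, especially inference for large language models (LLMs). The main goal of the Groq LPU system is to deliver real-time, low-latency inference.

- Embedding model: FastEmbed is a lightweight, fast Python library for embedding generation.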

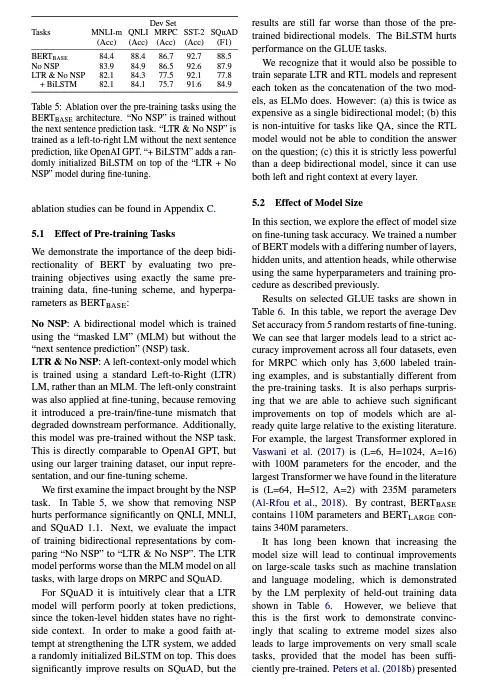

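- Evaluation: Ragas provides tailored metrics for evaluating each component of a RAG pipeline in isolation.

Code implementation

Install the required dependencies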

!pip install -qU langchain_experimental langchain_openai langchain_community langchain ragas chromadb langchain-groq fastembed pypdf openai

langchain==0.1.16

langchain-community==0.0.34

langchain-core==0.1.45

langchain-experimental==0.0.57

langchain-groq==0.1.2

langchain-openai==0.1.3

langchain-text-splitters==0.0.1

langcodes==3.3.0

langsmith==0.1.49

chromadb==0.4.24

ragas==0.1.7

fastembed==0.2.6

Download the data

!wget "https://arxiv.org/pdf/1810.04805.pdf"

Process the PDF content

from langchain.document_loaders import PyPDFLoader
from langchain.text_splitter import RecursiveCharacterTextSplitter

# Load the BERT paper; PyPDFLoader returns one Document per page
loader = PyPDFLoader("1810.04805.pdf")
documents = loader.load()

# Number of pages loaded
print(len(documents))

Perform naive chunking (RecursiveCharacterTextSplitter)

from langchain.text_splitter import RecursiveCharacterTextSplitter

text_splitter = RecursiveCharacterTextSplitter(
    chunk_size=1000,
    chunk_overlap=0,
    length_function=len,
    is_separator_regex=False
)

# Split every page into chunks of at most 1000 characters
naive_chunks = text_splitter.split_documents(documents)

# Print a few chunks to inspect them
for chunk in naive_chunks[10:15]:
    print(chunk.page_content + "\n")

###########################RESPONSE###############################

BERT BERT

E[CLS] E1 E[SEP] ... ENE1’... EM’

C

T1

T[SEP] ...

TN

T1’...

TM’

[CLS] Tok 1 [SEP] ... Tok NTok 1 ... TokM

Question Paragraph Start/End Span

BERT

E[CLS] E1 E[SEP] ... ENE1’... EM’

C

T1

T[SEP] ...

TN

T1’...

TM’

[CLS] Tok 1 [SEP] ... Tok NTok 1 ... TokM

Masked Sentence A Masked Sentence B

Pre-training Fine-Tuning NSP Mask LM Mask LM

Unlabeled Sentence A and B Pair SQuAD

Question Answer Pair NER MNLI Figure 1: Overall pre-training and fine-tuning procedures for BERT. Apart from output layers, the same architec-

tures are used in both pre-training and fine-tuning. The same pre-trained model parameters are used to initialize

models for different down-stream tasks. During fine-tuning, all parameters are fine-tuned. [CLS] is a special

symbol added in front of every input example, and [SEP] is a special separator token (e.g. separating ques-

tions/answers).

ing and auto-encoder objectives have been used

for pre-training such models (Howard and Ruder,

2018; Radford et al., 2018; Dai and Le, 2015).

2.3 Transfer Learning from Supervised Data

There has also been work showing effective trans-

fer from supervised tasks with large datasets, such

as natural language inference (Conneau et al.,

2017) and machine translation (McCann et al.,

2017). Computer vision research has also demon-

strated the importance of transfer learning from

large pre-trained models, where an effective recipe

is to fine-tune models pre-trained with Ima-

geNet (Deng et al., 2009; Yosinski et al., 2014).

3 BERT

We introduce BERT and its detailed implementa-

tion in this section. There are two steps in our

framework: pre-training and fine-tuning . Dur-

ing pre-training, the model is trained on unlabeled

data over different pre-training tasks. For fine-

tuning, the BERT model is first initialized with

the pre-trained parameters, and all of the param-

eters are fine-tuned using labeled data from the

downstream tasks. Each downstream task has sep-

arate fine-tuned models, even though they are ini-

tialized with the same pre-trained parameters. The

question-answering example in Figure 1 will serve

as a running example for this section.

A distinctive feature of BERT is its unified ar-

chitecture across different tasks. There is mini-mal difference between the pre-trained architec-

ture and the final downstream architecture.

Model Architecture BERT’s model architec-

ture is a multi-layer bidirectional Transformer en-

coder based on the original implementation de-

scribed in Vaswani et al. (2017) and released in

thetensor2tensor library.1Because the use

of Transformers has become common and our im-

plementation is almost identical to the original,

we will omit an exhaustive background descrip-

tion of the model architecture and refer readers to

Vaswani et al. (2017) as well as excellent guides

such as “The Annotated Transformer.”2

In this work, we denote the number of layers

(i.e., Transformer blocks) as L, the hidden size as

H, and the number of self-attention heads as A.3

We primarily report results on two model sizes:

BERT BASE (L=12, H=768, A=12, Total Param-

eters=110M) and BERT LARGE (L=24, H=1024,

A=16, Total Parameters=340M).

BERT BASE was chosen to have the same model

size as OpenAI GPT for comparison purposes.

Critically, however, the BERT Transformer uses

bidirectional self-attention, while the GPT Trans-

former uses constrained self-attention where every

token can only attend to context to its left.4

1https://github.com/tensorflow/tensor2tensor

2http://nlp.seas.harvard.edu/2018/04/03/attention.html

3In all cases we set the feed-forward/filter size to be 4H,

i.e., 3072 for the H= 768 and 4096 for the H= 1024 .

4We note that in the literature the bidirectional Trans-

Input/Output Representations To make BERT

handle a variety of down-stream tasks, our input

representation is able to unambiguously represent

both a single sentence and a pair of sentences

(e.g.,⟨Question, Answer⟩) in one token sequence.

Throughout this work, a “sentence” can be an arbi-

trary span of contiguous text, rather than an actual

linguistic sentence. A “sequence” refers to the in-

put token sequence to BERT, which may be a sin-

gle sentence or two sentences packed together.

We use WordPiece embeddings (Wu et al.,

2016) with a 30,000 token vocabulary. The first

token of every sequence is always a special clas-

sification token ( [CLS] ). The final hidden state

corresponding to this token is used as the ag-

gregate sequence representation for classification

tasks. Sentence pairs are packed together into a

single sequence. We differentiate the sentences in

two ways. First, we separate them with a special

token ( [SEP] ). Second, we add a learned embed-

Instantiate the embedding model

from langchain_community.embeddings.fastembed import FastEmbedEmbeddings

embed_model = FastEmbedEmbeddings(model_name="BAAI/bge-base-en-v1.5")

Set up the API key for the LLM

from google.colab import userdata
from groq import Groq
from langchain_groq import ChatGroq

# Read the Groq API key from Colab's secret store
groq_api_key = userdata.get("GROQ_API_KEY")

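Perform semantic chunking

We will use the `percentile` threshold as our example today, but semantic chunking (SemanticChunker) offers three different strategies:

- `percentile` (default) - all differences between sentences are computed, and a split is made at any difference greater than the X-th percentile.

- `standard_deviation` - a split is made at any difference greater than X standard deviations.

- `interquartile` - the interquartile distance is used to split the chunks.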
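To make the three strategies concrete, the sketch below computes a split threshold from a list of sentence-to-sentence distances in each of the three ways. The formulas are a plausible reading of the descriptions above (mean plus X standard deviations, mean plus X times the interquartile range); they are assumptions for illustration, and the exact formulas and default values inside `SemanticChunker` may differ.

```python
import statistics

def percentile(values, q):
    """Percentile with linear interpolation between ranks; q is in [0, 100]."""
    ordered = sorted(values)
    if not ordered:
        raise ValueError("values must not be empty")
    position = (len(ordered) - 1) * q / 100.0
    lower = int(position)
    upper = min(lower + 1, len(ordered) - 1)
    fraction = position - lower
    return ordered[lower] + (ordered[upper] - ordered[lower]) * fraction

def breakpoint_threshold(distances, kind, amount):
    """Distance above which a new chunk starts, under one of three strategies."""
    if kind == "percentile":
        return percentile(distances, amount)
    if kind == "standard_deviation":
        return statistics.mean(distances) + amount * statistics.pstdev(distances)
    if kind == "interquartile":
        iqr = percentile(distances, 75) - percentile(distances, 25)
        return statistics.mean(distances) + amount * iqr
    raise ValueError(f"unknown threshold type: {kind}")

# Toy distances between consecutive sentences; the last one is a clear topic shift.
distances = [0.1, 0.2, 0.3, 0.4, 1.0]
for kind, amount in [("percentile", 90), ("standard_deviation", 1), ("interquartile", 1.5)]:
    threshold = breakpoint_threshold(distances, kind, amount)
    breakpoints = [i for i, d in enumerate(distances) if d > threshold]
    print(kind, round(threshold, 4), breakpoints)
```

On this toy input all three strategies place the only breakpoint at the last distance, but on real text they can disagree, which is why the threshold type is exposed as a parameter.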

from langchain_experimental.text_splitter import SemanticChunker

semantic_chunker = SemanticChunker(embed_model, breakpoint_threshold_type="percentile")

# Chunk the text of every page
semantic_chunks = semantic_chunker.create_documents([d.page_content for d in documents])

# Print the chunk that contains a known section heading, plus its length
for semantic_chunk in semantic_chunks:
    if "Effect of Pre-training Tasks" in semantic_chunk.page_content:
        print(semantic_chunk.page_content)
        print(len(semantic_chunk.page_content))

#############################RESPONSE###############################

Dev Set

Tasks MNLI-m QNLI MRPC SST-2 SQuAD

(Acc) (Acc) (Acc) (Acc) (F1)

BERT BASE 84.4 88.4 86.7 92.7 88.5

No NSP 83.9 84.9 86.5 92.6 87.9

LTR & No NSP 82.1 84.3 77.5 92.1 77.8

+ BiLSTM 82.1 84.1 75.7 91.6 84.9

Table 5: Ablation over the pre-training tasks using the

BERT BASE architecture. “No NSP” is trained without

the next sentence prediction task. “LTR & No NSP” is

trained as a left-to-right LM without the next sentence

prediction, like OpenAI GPT. “+ BiLSTM” adds a ran-

domly initialized BiLSTM on top of the “LTR + No

NSP” model during fine-tuning. ablation studies can be found in Appendix C. 5.1 Effect of Pre-training Tasks

We demonstrate the importance of the deep bidi-

rectionality of BERT by evaluating two pre-

training objectives using exactly the same pre-

training data, fine-tuning scheme, and hyperpa-

rameters as BERT BASE :

No NSP : A bidirectional model which is trained

using the “masked LM” (MLM) but without the

“next sentence prediction” (NSP) task. LTR & No NSP : A left-context-only model which

is trained using a standard Left-to-Right (LTR)

LM,

Instantiate the vector store

from langchain_community.vectorstores import Chroma

semantic_chunk_vectorstore = Chroma.from_documents(semantic_chunks, embedding=embed_model)

We "limit" the semantic retriever to k = 1 to showcase the power of the semantic chunking strategy while keeping the token counts of the semantically and naively retrieved contexts similar.

Instantiate the retrieval step

semantic_chunk_retriever = semantic_chunk_vectorstore.as_retriever(search_kwargs={"k": 1})

semantic_chunk_retriever.invoke("Describe the Feature-based Approach with BERT?")

########################RESPONSE###################################

[Document(page_content='The right part of the paper represents the\nDev set results. For the feature-based approach,\nwe concatenate the last 4 layers of BERT as the\nfeatures, which was shown to be the best approach\nin Section 5.3. From the table it can be seen that fine-tuning is\nsurprisingly robust to different masking strategies. However, as expected, using only the M ASK strat-\negy was problematic when applying the feature-\nbased approach to NER. Interestingly, using only\nthe R NDstrategy performs much worse than our\nstrategy as well.')]

Instantiate the augmentation step (context augmentation)

from langchain_core.prompts import ChatPromptTemplate

rag_template = """\
Use the following context to answer the user's query. If you cannot answer, please respond with 'I don't know'.

User's Query:
{question}

Context:
{context}
"""

rag_prompt = ChatPromptTemplate.from_template(rag_template)

Instantiate the generation step

chat_model = ChatGroq(
    temperature=0,
    model_name="mixtral-8x7b-32768",
    api_key=userdata.get("GROQ_API_KEY"),
)

Create the RAG pipeline with semantic chunking

from langchain_core.runnables import RunnablePassthrough
from langchain_core.output_parsers import StrOutputParser

# The retriever fills {context}; the raw question is passed through to {question}
semantic_rag_chain = (
    {"context": semantic_chunk_retriever, "question": RunnablePassthrough()}
    | rag_prompt
    | chat_model
    | StrOutputParser()
)

Question 1:

semantic_rag_chain.invoke("Describe the Feature-based Approach with BERT?")

################ RESPONSE ###################################

The feature-based approach with BERT, as mentioned in the context, involves using BERT as a feature extractor for a downstream natural language processing task, specifically Named Entity Recognition (NER) in this case.

To use BERT in a feature-based approach, the last 4 layers of BERT are concatenated to serve as the features for the task. This was found to be the most effective approach in Section 5.3 of the paper.

The context also mentions that fine-tuning BERT is surprisingly robust to different masking strategies. However, when using the feature-based approach for NER, using only the MASK strategy was problematic. Additionally, using only the RND strategy performed much worse than the proposed strategy.

In summary, the feature-based approach with BERT involves using the last 4 layers of BERT as features for a downstream NLP task, and fine-tuning these features for the specific task. The approach was found to be robust to different masking strategies, but using only certain strategies was problematic for NER.

Question 2:

semantic_rag_chain.invoke("What is SQuADv2.0?")

################ RESPONSE ###################################

SQuAD v2.0, or Squad Two Point Zero, is a version of the Stanford Question Answering Dataset (SQuAD) that extends the problem definition of SQuAD 1.1 by allowing for the possibility that no short answer exists in the provided paragraph. This makes the problem more realistic, as not all questions have a straightforward answer within the provided text. The SQuAD 2.0 task uses a simple approach to extend the SQuAD 1.1 BERT model for this task, by treating questions that do not have an answer as having an answer span with start and end at the [CLS] token, and comparing the score of the no-answer span to the score of the best non-null span for prediction. The document also mentions that the BERT ensemble, which is a combination of 7 different systems using different pre-training checkpoints and fine-tuning seeds, outperforms all existing systems by a wide margin in SQuAD 2.0, even when excluding entries that use BERT as one of their components.

Question 3:

semantic_rag_chain.invoke("What is the purpose of Ablation Studies?")

################ RESPONSE ###################################

Ablation studies are used to understand the impact of different components or settings of a machine learning model on its performance. In the provided context, ablation studies are used to answer questions about the effect of the number of training steps and masking procedures on the performance of the BERT model. By comparing the performance of the model under different conditions, researchers can gain insights into the importance of these components or settings and how they contribute to the overall performance of the model.

Implement the RAG pipeline with the naive chunking strategy

naive_chunk_vectorstore = Chroma.from_documents(naive_chunks, embedding=embed_model)

# Retrieve 5 naive chunks per query (the semantic retriever uses k = 1)
naive_chunk_retriever = naive_chunk_vectorstore.as_retriever(search_kwargs={"k": 5})

naive_rag_chain = (
    {"context": naive_chunk_retriever, "question": RunnablePassthrough()}
    | rag_prompt
    | chat_model
    | StrOutputParser()
)

Question 1:

naive_rag_chain.invoke("Describe the Feature-based Approach with BERT?")

#############################RESPONSE##########################

The Feature-based Approach with BERT involves extracting fixed features from the pre-trained BERT model, as opposed to the fine-tuning approach where all parameters are jointly fine-tuned on a downstream task. The feature-based approach has certain advantages, such as being applicable to tasks that cannot be easily represented by a Transformer encoder architecture, and providing major computational benefits by pre-computing an expensive representation of the training data once and then running many experiments with cheaper models on top of this representation. In the context provided, the feature-based approach is compared to the fine-tuning approach on the CoNLL-2003 Named Entity Recognition (NER) task, with the feature-based approach using a case-preserving WordPiece model and including the maximal document context provided by the data. The results presented in Table 7 show the performance of both approaches on the NER task.

Question 2:

naive_rag_chain.invoke("What is SQuADv2.0?")

#############################RESPONSE##########################

SQuAD v2.0, or the Stanford Question Answering Dataset version 2.0, is a collection of question/answer pairs that extends the SQuAD v1.1 problem definition by allowing for the possibility that no short answer exists in the provided paragraph. This makes the problem more realistic. The SQuAD v2.0 BERT model is extended from the SQuAD v1.1 model by treating questions that do not have an answer as having an answer span with start and end at the [CLS] token, and extending the probability space for the start and end answer span positions to include the position of the [CLS] token. For prediction, the score of the no-answer span is compared to the score of the best non-null span.

Question 3:

naive_rag_chain.invoke("What is the purpose of Ablation Studies?")

#############################RESPONSE##########################

Ablation studies are used to evaluate the effect of different components or settings in a machine learning model. In the provided context, ablation studies are used to understand the impact of certain aspects of the BERT model, such as the number of training steps and masking procedures, on the model's performance.

For instance, one ablation study investigates the effect of the number of training steps on BERT's performance. The results show that BERT BASE achieves higher fine-tuning accuracy on MNLI when trained for 1M steps compared to 500k steps, indicating that a larger number of training steps contributes to better performance.

Another ablation study focuses on different masking procedures during pre-training. The study compares BERT's masked language model (MLM) with a left-to-right strategy. The results demonstrate that the masking strategies aim to reduce the mismatch between pre-training and fine-tuning, as the [MASK] symbol does not appear during the fine-tuning stage. The study also reports Dev set results for both MNLI and Named Entity Recognition (NER) tasks, considering fine-tuning and feature-based approaches for NER.

Ragas evaluation for the semantic chunker

Split the documents with RecursiveCharacterTextSplitter

synthetic_data_splitter = RecursiveCharacterTextSplitter(
    chunk_size=1000,
    chunk_overlap=0,
    length_function=len,
    is_separator_regex=False
)

# These chunks are only used to generate synthetic questions and ground truths
synthetic_data_chunks = synthetic_data_splitter.create_documents([d.page_content for d in documents])

print(len(synthetic_data_chunks))

Create the following dataset

- Questions - synthetically generated (Groq mixtral-8x7b-32768)

- Contexts - created above (synthetic data chunks)

- Ground truths - synthetically generated (Groq mixtral-8x7b-32768)

- Answers - generated by our semantic RAG chain

questions = []
ground_truths_semantic = []
contexts = []
answers = []

question_prompt = """\
You are a teacher preparing a test. Please create a question that can be answered by referencing the following context.

Context:
{context}
"""

question_prompt = ChatPromptTemplate.from_template(question_prompt)

ground_truth_prompt = """\
Use the following context and question to answer this question using *only* the provided context.

Question:
{question}

Context:
{context}
"""

ground_truth_prompt = ChatPromptTemplate.from_template(ground_truth_prompt)

question_chain = question_prompt | chat_model | StrOutputParser()
ground_truth_chain = ground_truth_prompt | chat_model | StrOutputParser()

# For each of 10 chunks: generate a question, a ground-truth answer, and the RAG answer
for chunk in synthetic_data_chunks[10:20]:
    questions.append(question_chain.invoke({"context": chunk.page_content}))
    contexts.append([chunk.page_content])
    ground_truths_semantic.append(ground_truth_chain.invoke({"question": questions[-1], "context": contexts[-1]}))
    answers.append(semantic_rag_chain.invoke(questions[-1]))

Format the generated content as a HuggingFace dataset

from datasets import load_dataset, Dataset

# One record per question, using the column names Ragas expects
qagc_list = []

for question, answer, context, ground_truth in zip(questions, answers, contexts, ground_truths_semantic):
    qagc_list.append({
        "question": question,
        "answer": answer,
        "contexts": context,
        "ground_truth": ground_truth
    })

eval_dataset = Dataset.from_list(qagc_list)

eval_dataset

###########################RESPONSE###########################

Dataset({

features: ['question', 'answer', 'contexts', 'ground_truth'],

num_rows: 10

})

Run the Ragas metrics to evaluate the dataset we created.

from ragas.metrics import (
    answer_relevancy,
    faithfulness,
    context_recall,
    context_precision,
)

from ragas import evaluate

# First attempt: use the Groq chat model and FastEmbed embeddings as evaluators
result = evaluate(
    eval_dataset,
    metrics=[
        context_precision,
        faithfulness,
        answer_relevancy,
        context_recall,
    ],
    llm=chat_model,
    embeddings=embed_model,
    raise_exceptions=False
)

Here I first tried to use the open-source LLM served by Groq, but ran into a rate limit error:

groq.RateLimitError: Error code: 429 - {'error': {'message': 'Rate limit reached for model `mixtral-8x7b-32768` in organization `org_01htsyxttnebyt0av6tmfn1fy6` on tokens per minute (TPM): Limit 4500, Used 3867, Requested ~1679. Please try again in 13.940333333s. Visit https://console.groq.com/docs/rate-limits for more information.', 'type': 'tokens', 'code': 'rate_limit_exceeded'}}

So I switched the evaluation LLM to OpenAI, which the RAGAS framework uses by default.
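An alternative to switching providers, for calls you issue yourself, is to retry with exponential backoff when a rate limit is hit. The helper below is a generic sketch, not part of Groq, LangChain, or Ragas; `call_with_backoff`, its parameters, and `FakeRateLimitError` are names invented for this example, and the fake error only simulates a 429 so the code runs offline.

```python
import time

def call_with_backoff(fn, max_retries=5, base_delay=1.0,
                      is_rate_limit=lambda exc: True, sleep=time.sleep):
    """Call fn(); on a rate-limit error wait base_delay * 2**attempt and retry."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except Exception as exc:
            # Give up on the last attempt or on errors that are not rate limits.
            if attempt == max_retries or not is_rate_limit(exc):
                raise
            sleep(base_delay * (2 ** attempt))

class FakeRateLimitError(Exception):
    """Stand-in for a provider's 429 error (hypothetical, for the demo only)."""

attempts = {"count": 0}

def flaky_call():
    # Fails twice, then succeeds, to simulate a temporary rate limit.
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise FakeRateLimitError("rate limit reached")
    return "ok"

delays = []  # record the waits instead of actually sleeping
result = call_with_backoff(
    flaky_call,
    base_delay=1.0,
    is_rate_limit=lambda exc: isinstance(exc, FakeRateLimitError),
    sleep=delays.append,
)
print(result, delays)
```

In the notebook this could wrap a chain invocation such as `question_chain.invoke(...)`, with `is_rate_limit` checking for `groq.RateLimitError`. It does not change how `evaluate` schedules its own requests, so it would not by itself have avoided the error shown above.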

Set the OpenAI API key

import os
from google.colab import userdata
import openai

os.environ['OPENAI_API_KEY'] = userdata.get('OPENAI_API_KEY')
openai.api_key = os.environ['OPENAI_API_KEY']

from ragas import evaluate

# No llm/embeddings arguments: Ragas falls back to its OpenAI defaults
result = evaluate(
    eval_dataset,
    metrics=[
        context_precision,
        faithfulness,
        answer_relevancy,
        context_recall,
    ],
)

result

#########################RESPONSE##########################

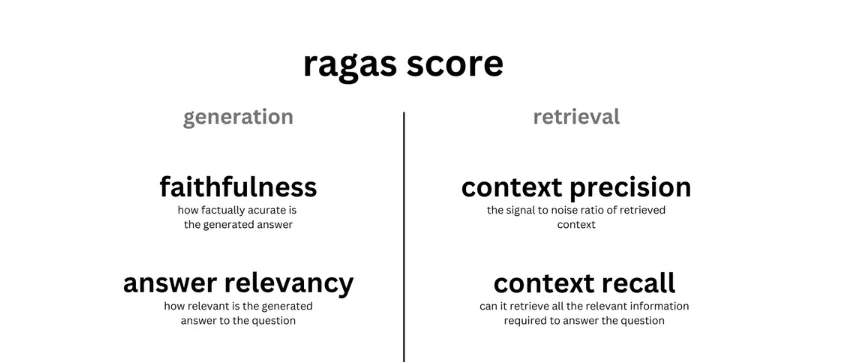

{'context_precision': 1.0000, 'faithfulness': 0.8857, 'answer_relevancy': 0.9172, 'context_recall': 1.0000}

#Extract the details into a dataframe

results_df = result.to_pandas()

results_df

RAGAS evaluation for the naive chunker

import tqdm

questions = []
ground_truths_semantic = []
contexts = []
answers = []

# Same procedure as before, but the answers come from the naive RAG chain
for chunk in tqdm.tqdm(synthetic_data_chunks[10:20]):
    questions.append(question_chain.invoke({"context": chunk.page_content}))
    contexts.append([chunk.page_content])
    ground_truths_semantic.append(ground_truth_chain.invoke({"question": questions[-1], "context": contexts[-1]}))
    answers.append(naive_rag_chain.invoke(questions[-1]))

Build the naive chunking evaluation dataset

qagc_list = []

for question, answer, context, ground_truth in zip(questions, answers, contexts, ground_truths_semantic):
    qagc_list.append({
        "question": question,
        "answer": answer,
        "contexts": context,
        "ground_truth": ground_truth
    })

naive_eval_dataset = Dataset.from_list(qagc_list)

naive_eval_dataset

############################RESPONSE########################

Dataset({

features: ['question', 'answer', 'contexts', 'ground_truth'],

num_rows: 10

})

Evaluate the dataset we created with the RAGAS framework

naive_result = evaluate(
    naive_eval_dataset,
    metrics=[
        context_precision,
        faithfulness,
        answer_relevancy,
        context_recall,
    ],
)

naive_result

############################RESPONSE#######################

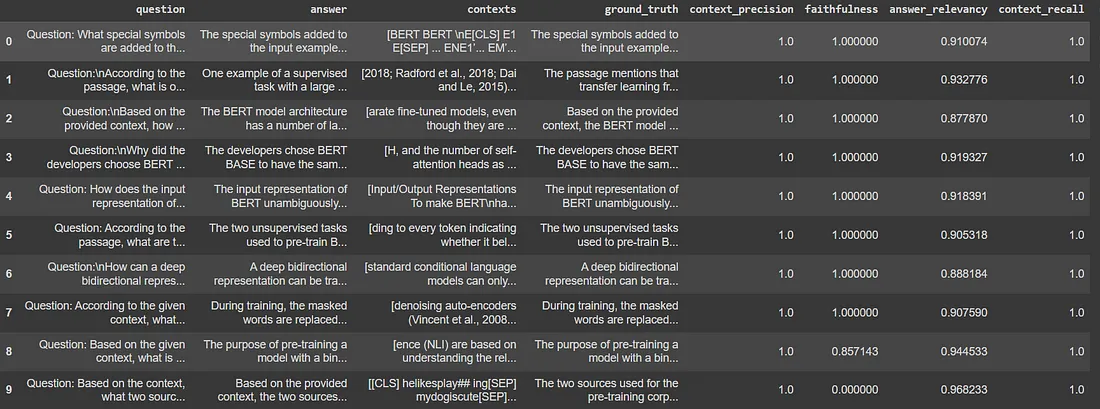

{'context_precision': 1.0000, 'faithfulness': 0.9500, 'answer_relevancy': 0.9182, 'context_recall': 1.0000}

naive_results_df = naive_result.to_pandas()

naive_results_df


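Conclusion

From these results we can see that semantic chunking and naive chunking perform almost identically. The only notable difference is that naive chunking is more faithful to the facts, with a faithfulness score of 0.95 versus about 0.89 (0.8857) for semantic chunking.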
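The comparison can be read straight off the two result dictionaries reported above. The short sketch below copies those four scores per method and prints the per-metric difference; the variable names are illustrative.

```python
# Scores copied from the two Ragas results shown earlier in this guide.
semantic_scores = {
    "context_precision": 1.0000,
    "faithfulness": 0.8857,
    "answer_relevancy": 0.9172,
    "context_recall": 1.0000,
}
naive_scores = {
    "context_precision": 1.0000,
    "faithfulness": 0.9500,
    "answer_relevancy": 0.9182,
    "context_recall": 1.0000,
}

# Positive values mean the naive chunker scored higher on that metric.
differences = {
    metric: round(naive_scores[metric] - semantic_scores[metric], 4)
    for metric in semantic_scores
}

for metric, delta in differences.items():
    print(f"{metric}: naive - semantic = {delta:+.4f}")
```

Only faithfulness moves by more than a hundredth, and with 10 evaluation questions per method a gap of that size should be read with caution.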

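In summary, semantic chunking groups contextually similar information together to create independent, meaningful segments. By giving large language models more focused input, this approach aims to improve their ability to understand and process natural language data, and with it their efficiency and effectiveness.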