Implementing Advanced RAG in LangChain with RAPTOR

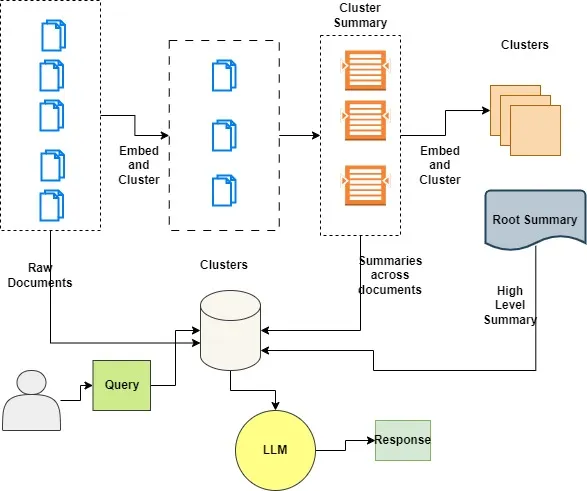

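In traditional RAG, we usually retrieve short, contiguous chunks of text. With long-context documents, however, we cannot simply chunk and embed the documents, nor can we simply stuff every document into the context. Instead, we want a good minimal-chunking approach for long-context LLMs. This is where RAPTOR comes in.

What is RAPTOR?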

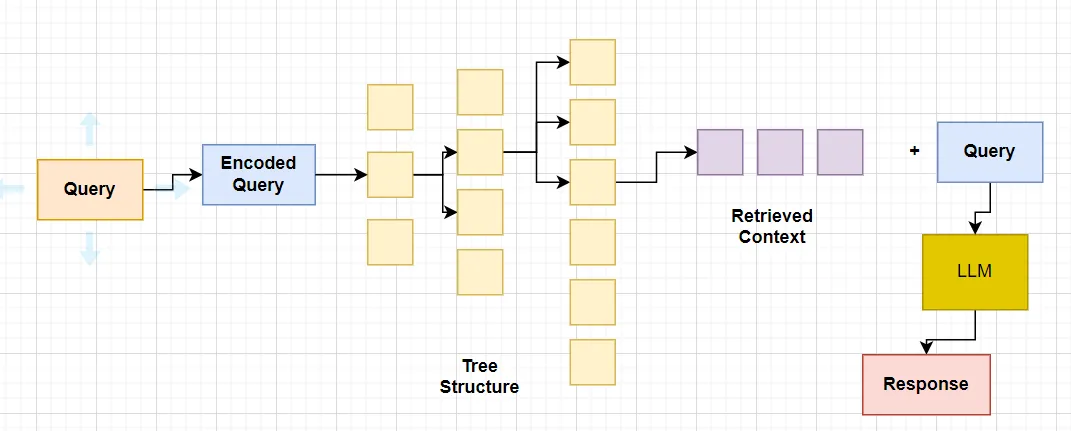

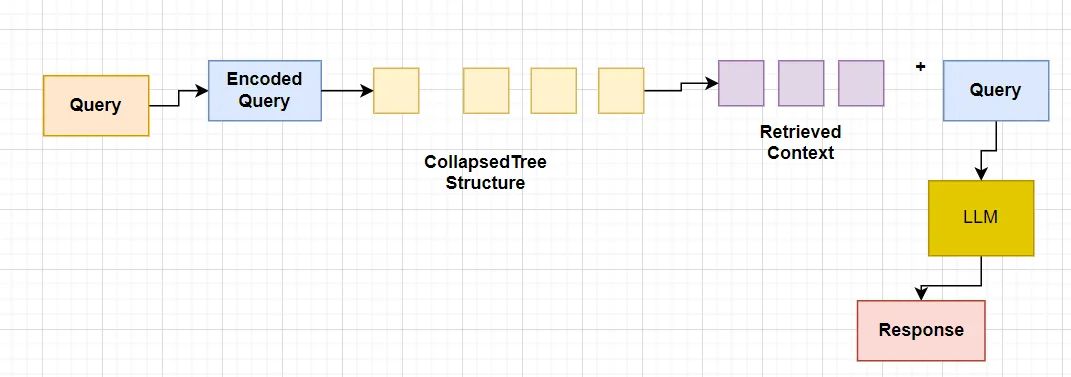

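Recursive Abstractive Processing for Tree-Organized Retrieval (RAPTOR) is a new and powerful indexing and retrieval technique for LLMs. It takes a bottom-up approach, clustering and summarizing text segments (chunks) to form a hierarchical tree structure.

The RAPTOR paper presents an interesting approach to indexing and retrieving documents:

- The leaves are a set of starting documents.

- The leaves are embedded and clustered.

- Each cluster is then summarized into a higher-level (more abstract) consolidation of the information shared by similar documents.

- This process is repeated recursively, forming a "tree" that runs from the raw documents (leaves) up to more abstract summaries.

We can apply this approach at different scales; the leaves can be:

- Text chunks from a single document (as shown in the paper)

- Full documents (as shown below)

With longer-context LLMs, this can be performed over full documents.

This tree structure is the key to how RAPTOR works: it captures both the high-level and the detailed aspects of the text, which is especially useful for complex thematic queries and for multi-step reasoning in question-answering tasks.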

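The process splits documents into shorter texts (called "chunks") and embeds the chunks with an embedding model. A clustering algorithm then clusters these embeddings. Once the clusters are created, an LLM summarizes the texts associated with each cluster.

The generated summaries form the nodes of the tree, with higher-level nodes providing more abstract summaries.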
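
The recursive loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the paper's implementation: `toy_cluster` and `toy_summarize` are hypothetical stand-ins for the embed-and-cluster step and for the LLM summarization call, and `build_tree` is a name introduced here only for the example.

```python
from typing import Callable, Dict, List


def toy_cluster(texts: List[str], group_size: int = 2) -> List[List[str]]:
    """Hypothetical stand-in for embed + cluster: groups neighbouring texts."""
    return [texts[i:i + group_size] for i in range(0, len(texts), group_size)]


def toy_summarize(texts: List[str]) -> str:
    """Hypothetical stand-in for an LLM summary: keeps the first word of each text."""
    return "summary(" + ", ".join(t.split()[0] for t in texts) + ")"


def build_tree(
    leaves: List[str],
    cluster: Callable[[List[str]], List[List[str]]] = toy_cluster,
    summarize: Callable[[List[str]], str] = toy_summarize,
    max_levels: int = 3,
) -> Dict[int, List[str]]:
    """Return {level: texts}; level 0 holds the leaves, higher levels hold summaries."""
    tree = {0: list(leaves)}
    current = list(leaves)
    for level in range(1, max_levels + 1):
        if len(current) <= 1:  # nothing left to cluster
            break
        # Summarize each cluster; the summaries become the next level's nodes.
        current = [summarize(group) for group in cluster(current)]
        tree[level] = current
    return tree


tree = build_tree([f"chunk{i} text" for i in range(8)])
for level, nodes in tree.items():
    print(level, len(nodes))
```

Each level has fewer nodes than the one below it, and at query time every level can be indexed so that both detailed chunks and abstract summaries are retrievable.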

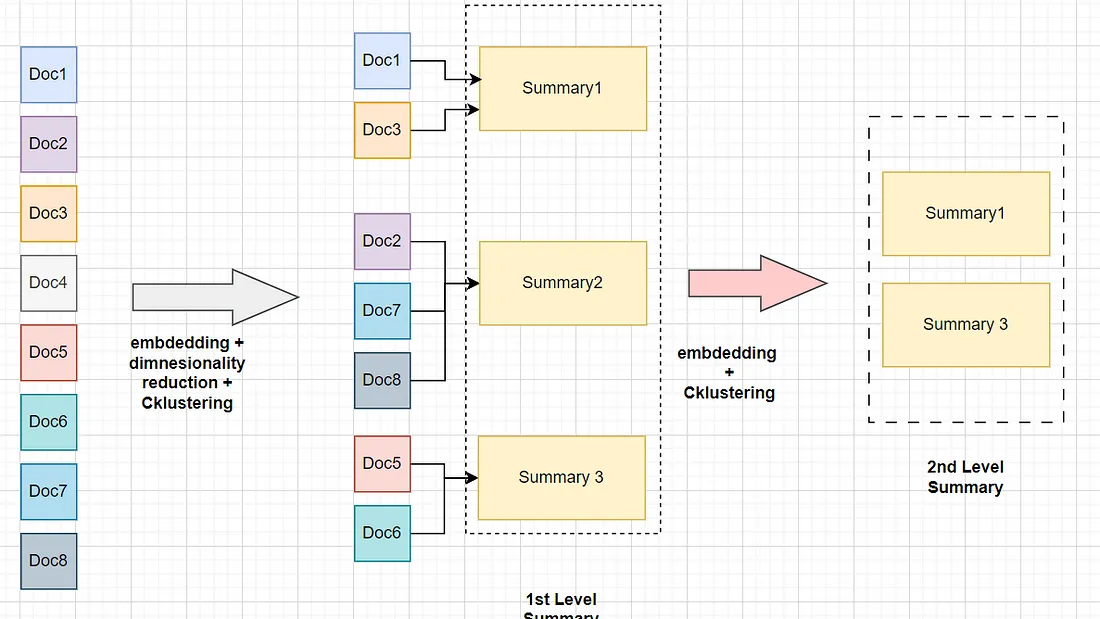

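Suppose we have 8 document chunks that belong to a large manual. Rather than just embedding the chunks and retrieving over them, we first embed the chunks and then reduce their dimensionality, because clustering over all dimensions (1536 for the OpenAI embedding model, 384 for a typical small open-source embedding model) is computationally expensive.

A clustering algorithm is then applied to the reduced-dimension embeddings. Next, we collect all the chunks that belong to each cluster and summarize the context of each cluster. The generated summaries are embedded and clustered again, and the process repeats until the model's token limit (context window) is reached.

In short, the intuition behind RAPTOR is as follows:

- Cluster and summarize similar documents.

- Capture the information from related documents in a summary.

- Help with questions that need less context to answer.

Code implementation

Install the required libraries.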

!pip install -U langchain umap-learn scikit-learn langchain_community tiktoken langchain-openai langchainhub chromadb
!CMAKE_ARGS="-DLLAMA_CUBLAS=on" FORCE_CMAKE=1 pip install -qU llama-cpp-python

import locale

# Force locale.getpreferredencoding() to report UTF-8
def getpreferredencoding(do_setlocale=True):
    return "UTF-8"

locale.getpreferredencoding = getpreferredencoding

Download the required Zephyr model weights file

!wget "https://huggingface.co/TheBloke/zephyr-7B-beta-GGUF/resolve/main/zephyr-7b-beta.Q4_K_M.gguf"

Instantiate the LLM

from langchain_community.llms import LlamaCpp
from langchain_core.callbacks import CallbackManager, StreamingStdOutCallbackHandler
from langchain_core.prompts import PromptTemplate

n_gpu_layers = -1  # Number of layers to put on the GPU; the rest stay on the CPU. Use -1 to move all layers to the GPU.
n_batch = 512  # Should be between 1 and n_ctx; consider the amount of VRAM in your GPU.

# Callbacks support token-wise streaming
callback_manager = CallbackManager([StreamingStdOutCallbackHandler()])

# Make sure the model path is correct for your system!
model = LlamaCpp(
    model_path="/content/zephyr-7b-beta.Q4_K_M.gguf",
    n_gpu_layers=n_gpu_layers,
    n_batch=n_batch,
    temperature=0.75,
    max_tokens=1000,
    top_p=1,
    n_ctx=35000,
    callback_manager=callback_manager,
    verbose=True,  # Verbose is required to pass to the callback manager
)

Instantiate the embedding model.

from langchain_community.vectorstores import Chroma
from langchain_community.embeddings import HuggingFaceEmbeddings
from langchain_community.vectorstores.utils import DistanceStrategy

EMBEDDING_MODEL_NAME = "thenlper/gte-small"

embd = HuggingFaceEmbeddings(
    model_name=EMBEDDING_MODEL_NAME,
    multi_process=True,
    model_kwargs={"device": "cuda"},
    encode_kwargs={"normalize_embeddings": True},  # set True for cosine similarity
)
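
The `normalize_embeddings` flag matters because, for unit-length vectors, the dot product equals the cosine similarity. The following is a small standard-library sketch of that identity; the helper names (`l2_normalize`, `dot`, `cosine`) and the two sample vectors are illustrative assumptions, not part of the embedding library.

```python
import math
from typing import List


def l2_normalize(vec: List[float]) -> List[float]:
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def dot(a: List[float], b: List[float]) -> float:
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity computed from the raw (unnormalized) vectors."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))


a, b = [3.0, 4.0], [4.0, 3.0]
print(cosine(a, b))                           # cosine similarity of the raw vectors
print(dot(l2_normalize(a), l2_normalize(b)))  # same value via a plain dot product
```

Once the embeddings are normalized, a cheap dot product can therefore be used in place of the full cosine formula.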

Load the data

Here we use LangChain's LCEL documentation as the input data

import matplotlib.pyplot as plt
import tiktoken
from bs4 import BeautifulSoup as Soup
from langchain_community.document_loaders.recursive_url_loader import RecursiveUrlLoader

# Helper function to count the number of tokens in each text
def num_tokens_from_string(string: str, encoding_name: str) -> int:
    """Returns the number of tokens in a text string."""
    encoding = tiktoken.get_encoding(encoding_name)
    num_tokens = len(encoding.encode(string))
    return num_tokens

# LCEL docs
url = "https://python.langchain.com/docs/expression_language/"
loader = RecursiveUrlLoader(
    url=url, max_depth=20, extractor=lambda x: Soup(x, "html.parser").text
)
docs = loader.load()

# LCEL w/ PydanticOutputParser (outside the primary LCEL docs)
url = "https://python.langchain.com/docs/modules/model_io/output_parsers/quick_start"
loader = RecursiveUrlLoader(
    url=url, max_depth=1, extractor=lambda x: Soup(x, "html.parser").text
)
docs_pydantic = loader.load()

# LCEL w/ Self Query (outside the primary LCEL docs)
url = "https://python.langchain.com/docs/modules/data_connection/retrievers/self_query/"
loader = RecursiveUrlLoader(
    url=url, max_depth=1, extractor=lambda x: Soup(x, "html.parser").text
)
docs_sq = loader.load()

# Doc texts
docs.extend([*docs_pydantic, *docs_sq])
docs_texts = [d.page_content for d in docs]

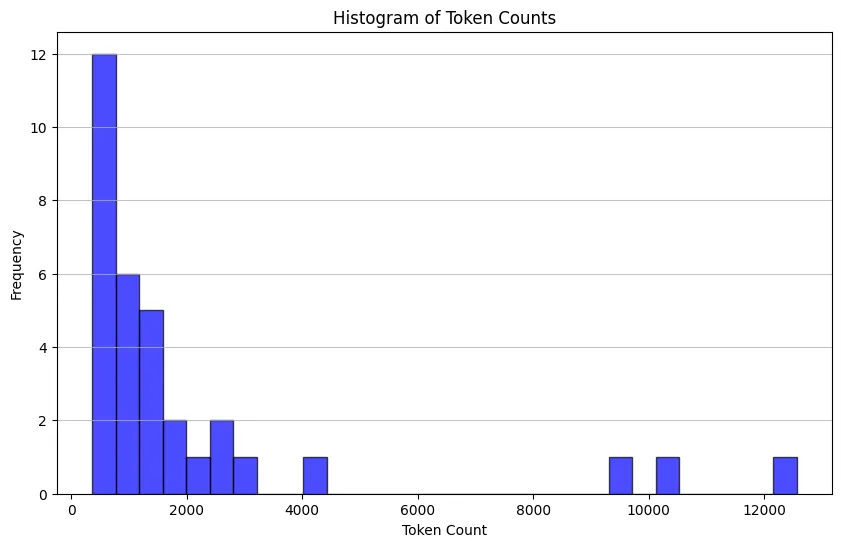

Count the tokens in each document to see how large the original documents are, and visualize the counts with a histogram

counts = [num_tokens_from_string(d, "cl100k_base") for d in docs_texts]

# Plotting the histogram of token counts
plt.figure(figsize=(10, 6))
plt.hist(counts, bins=30, color="blue", edgecolor="black", alpha=0.7)
plt.title("Histogram of Token Counts")
plt.xlabel("Token Count")
plt.ylabel("Frequency")
plt.grid(axis="y", alpha=0.75)

# Display the histogram
plt.show()

Check whether all the documents together fit within our LLM's context window.

# Doc texts concat
d_sorted = sorted(docs, key=lambda x: x.metadata["source"])
d_reversed = list(reversed(d_sorted))
concatenated_content = "\n\n\n --- \n\n\n".join(
    [doc.page_content for doc in d_reversed]
)
print(
    "Num tokens in all context: %s"
    % num_tokens_from_string(concatenated_content, "cl100k_base")
)

# Response
Num tokens in all context: 69108

Split the documents into chunks that fit within our LLM's context window.

# Doc texts split
from langchain_text_splitters import RecursiveCharacterTextSplitter

chunk_size_tok = 1000
text_splitter = RecursiveCharacterTextSplitter.from_tiktoken_encoder(
    chunk_size=chunk_size_tok, chunk_overlap=0
)
texts_split = text_splitter.split_text(concatenated_content)

print(f"Number of text splits generated: {len(texts_split)}")

# Response
Number of text splits generated: 142
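
To make the idea of a token budget concrete, here is a deliberately simplified sketch. It is not how `RecursiveCharacterTextSplitter` works internally: that splitter measures length with tiktoken tokens and prefers to break on separators, whereas this hypothetical `split_by_token_budget` helper treats whitespace-separated words as stand-in tokens and packs them greedily.

```python
from typing import List


def split_by_token_budget(text: str, chunk_size: int) -> List[str]:
    """Greedily pack whitespace-separated words into chunks of at most chunk_size words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


# 25 stand-in tokens split with a budget of 10 per chunk and no overlap.
sample = " ".join(f"w{i}" for i in range(25))
chunks = split_by_token_budget(sample, chunk_size=10)
print([len(c.split()) for c in chunks])  # → [10, 10, 5]
```

With no overlap, joining the chunks back together reproduces the original text, which is the behaviour `chunk_overlap=0` asks for above.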

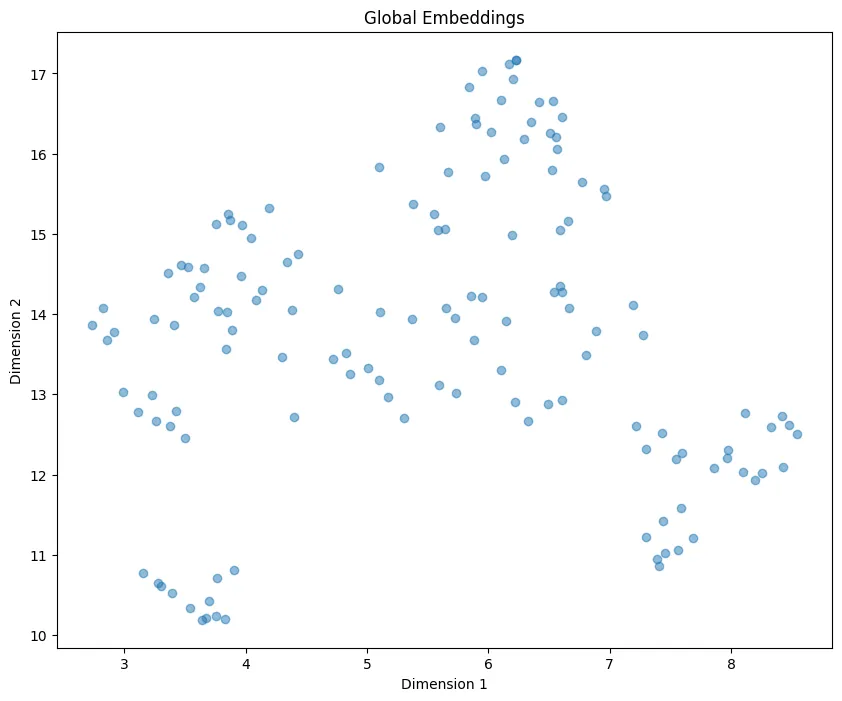

Generate the list of global embeddings.

It contains the semantic embedding of each chunk

global_embeddings = [embd.embed_query(txt) for txt in texts_split]
print(len(global_embeddings[0]))

# Response
384

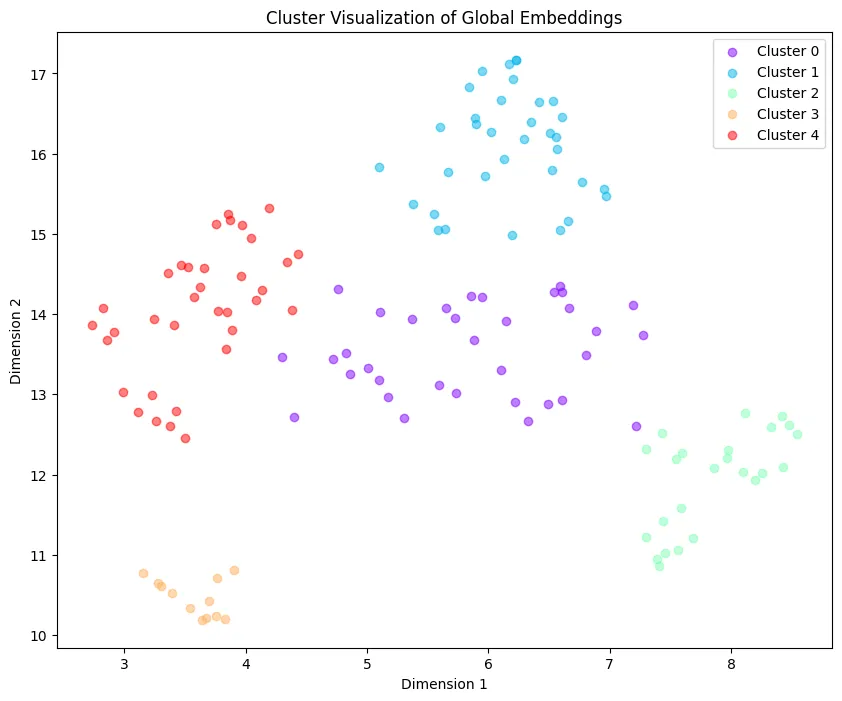

Reduce the dimensionality from 384 to 2 so that clustering runs on the reduced embeddings, and visualize the result.

import matplotlib.pyplot as plt
from typing import Optional
import numpy as np
import umap

def reduce_cluster_embeddings(
    embeddings: np.ndarray,
    dim: int,
    n_neighbors: Optional[int] = None,
    metric: str = "cosine",
) -> np.ndarray:
    # Default neighborhood size: square root of (number of embeddings - 1)
    if n_neighbors is None:
        n_neighbors = int((len(embeddings) - 1) ** 0.5)
    return umap.UMAP(
        n_neighbors=n_neighbors, n_components=dim, metric=metric
    ).fit_transform(embeddings)

dim = 2
global_embeddings_reduced = reduce_cluster_embeddings(global_embeddings, dim)
print(global_embeddings_reduced[0])

plt.figure(figsize=(10, 8))
plt.scatter(global_embeddings_reduced[:, 0], global_embeddings_reduced[:, 1], alpha=0.5)
plt.title("Global Embeddings")
plt.xlabel("Dimension 1")
plt.ylabel("Dimension 2")
plt.show()
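
When `n_neighbors` is not given, the function above derives it from the number of embeddings. The short sketch below isolates that heuristic so its values are easy to inspect; `default_n_neighbors` is a hypothetical helper name used only for this illustration.

```python
def default_n_neighbors(n_points: int) -> int:
    """Same heuristic as reduce_cluster_embeddings: floor(sqrt(n_points - 1))."""
    return int((n_points - 1) ** 0.5)


for n in (10, 142, 1000):
    print(n, default_n_neighbors(n))
```

For the 142 chunks generated earlier this gives 11 neighbors. The value grows slowly with the dataset size, so larger collections get a somewhat wider neighborhood without it scaling linearly. For very small inputs the heuristic yields a very small value, so passing `n_neighbors` explicitly may be safer there.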

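Tree construction

The clustering approach used in tree construction includes a few interesting ideas.

GMM (Gaussian Mixture Model)

- Models the distribution of data points across different clusters

- Selects the optimal number of clusters by evaluating the model's Bayesian Information Criterion (BIC)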
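
As a rough illustration of how BIC trades fit against model size, the sketch below computes BIC = k·ln(n) − 2·ln(L) for several candidate cluster counts and keeps the lowest score. The log-likelihood values are made up for the example, and `gmm_n_params` assumes a full-covariance mixture; both are assumptions of this sketch rather than values from the walkthrough.

```python
import math
from typing import Dict


def gmm_n_params(n_components: int, dim: int) -> int:
    """Free parameters of a full-covariance GMM: means + covariances + mixing weights."""
    means = n_components * dim
    covariances = n_components * dim * (dim + 1) // 2
    weights = n_components - 1
    return means + covariances + weights


def bic(log_likelihood: float, n_params: int, n_samples: int) -> float:
    """BIC = k * ln(n) - 2 * ln(L); lower is better."""
    return n_params * math.log(n_samples) - 2.0 * log_likelihood


# Hypothetical total log-likelihoods for 142 two-dimensional points.
log_likelihoods: Dict[int, float] = {1: -520.0, 2: -450.0, 3: -440.0, 4: -436.0}
scores = {
    k: bic(ll, gmm_n_params(k, dim=2), n_samples=142)
    for k, ll in log_likelihoods.items()
}
best_k = min(scores, key=scores.get)
print(best_k)  # → 2
```

Going from 2 to 3 or 4 components improves the likelihood only slightly, so the parameter penalty outweighs the gain and two clusters score best.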

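UMAP (Uniform Manifold Approximation and Projection)

- Supports clustering

- Reduces the dimensionality of high-dimensional data

- Helps highlight the natural grouping of data points based on their similarity

Local and global clustering

- Used to analyze data at different scales

- Effectively captures both fine-grained and broader patterns in the data

Thresholding

- Applied in the context of GMM to determine cluster membership

- Based on the probability distribution (a data point can be assigned to ≥ 1 cluster)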
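
Thresholding on membership probabilities can be sketched without any clustering library. The probabilities below are hypothetical, and `assign_clusters` is an illustrative helper that mirrors the "keep every cluster whose probability exceeds the threshold" rule.

```python
from typing import List


def assign_clusters(probs: List[List[float]], threshold: float) -> List[List[int]]:
    """For each point, return the indices of every cluster whose probability exceeds threshold."""
    return [[k for k, p in enumerate(row) if p > threshold] for row in probs]


# Hypothetical membership probabilities for three points over three clusters.
probs = [
    [0.90, 0.05, 0.05],  # clearly cluster 0
    [0.45, 0.45, 0.10],  # split between clusters 0 and 1
    [0.34, 0.33, 0.33],  # no confident membership
]
print(assign_clusters(probs, threshold=0.4))  # → [[0], [0, 1], []]
print(assign_clusters(probs, threshold=0.5))  # → [[0], [], []]
```

Because each row sums to 1, a threshold of 0.5 allows at most one cluster per point, while a lower threshold lets a point belong to several clusters. Points that clear no threshold end up with an empty assignment.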

import matplotlib.pyplot as plt
import numpy as np
from sklearn.mixture import GaussianMixture

def get_optimal_clusters(embeddings: np.ndarray, max_clusters: int = 50, random_state: int = 1234):
    # Fit one GMM per candidate cluster count and keep the count with the lowest BIC
    max_clusters = min(max_clusters, len(embeddings))
    bics = [GaussianMixture(n_components=n, random_state=random_state).fit(embeddings).bic(embeddings)
            for n in range(1, max_clusters)]
    return np.argmin(bics) + 1

def gmm_clustering(embeddings: np.ndarray, threshold: float, random_state: int = 0):
    n_clusters = get_optimal_clusters(embeddings)
    gm = GaussianMixture(n_components=n_clusters, random_state=random_state).fit(embeddings)
    probs = gm.predict_proba(embeddings)
    # Keep every cluster whose membership probability exceeds the threshold
    labels = [np.where(prob > threshold)[0] for prob in probs]
    return labels, n_clusters

labels, _ = gmm_clustering(global_embeddings_reduced, threshold=0.5)

# Use the first assigned cluster for plotting; -1 marks points with no assignment
plot_labels = np.array([label[0] if len(label) > 0 else -1 for label in labels])
plt.figure(figsize=(10, 8))

unique_labels = np.unique(plot_labels)
colors = plt.cm.rainbow(np.linspace(0, 1, len(unique_labels)))

for label, color in zip(unique_labels, colors):
    mask = plot_labels == label
    plt.scatter(global_embeddings_reduced[mask, 0], global_embeddings_reduced[mask, 1], color=color, label=f'Cluster {label}', alpha=0.5)

plt.title("Cluster Visualization of Global Embeddings")
plt.xlabel("Dimension 1")
plt.ylabel("Dimension 2")
plt.legend()
plt.show()

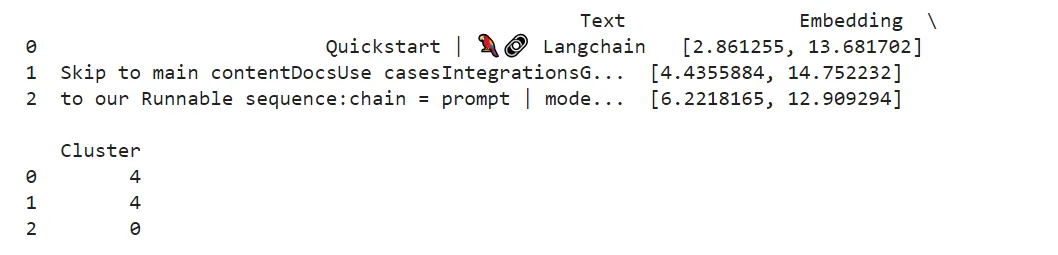

Create a DataFrame to inspect the texts associated with each cluster.

import pandas as pd

simple_labels = [label[0] if len(label) > 0 else -1 for label in labels]

df = pd.DataFrame({
    'Text': texts_split,
    'Embedding': list(global_embeddings_reduced),
    'Cluster': simple_labels
})
print(df.head(3))

def format_cluster_texts(df):
    # Join the texts of each cluster into one string, separated by " --- "
    clustered_texts = {}
    for cluster in df['Cluster'].unique():
        cluster_texts = df[df['Cluster'] == cluster]['Text'].tolist()
        clustered_texts[cluster] = " --- ".join(cluster_texts)
    return clustered_texts

clustered_texts = format_cluster_texts(df)

clustered_texts

####################################################################

# Response

{4: 'Quickstart | 🦜️🔗 Langchain --- Skip to main contentDocsUse casesIntegrationsGuidesAPIMorePeopleVersioningChangelogContributingTemplatesCookbooksTutorialsYouTube🦜️🔗LangSmithLangSmith DocsLangServe GitHubTemplates GitHubTemplates HubLangChain HubJS/TS Docs💬SearchGet startedIntroductionInstallationQuickstartSecurityLangChain Expression LanguageGet startedWhy use LCELInterfaceStreamingHow toCookbookLangChain Expression Language (LCEL)ModulesModel I/OModel I/OQuickstartConceptsPromptsChat ModelsLLMsOutput ParsersQuickstartCustom Output ParsersTypesRetrievalAgentsChainsMoreLangServeLangSmithLangGraphModulesModel I/OOutput ParsersQuickstartOn this pageQuickstartLanguage models output text. But many times you may want to get more\nstructured information than just text back. This is where output parsers\ncome in.Output parsers are classes that help structure language model responses.\nThere are two main methods an output parser must implement:“Get format instructions”: A method which returns a string\ncontaining instructions for how the output of a language model\nshould be formatted.“Parse”: A method which takes in a string (assumed to be the\nresponse from a language model) and parses it into some structure.And then one optional one:“Parse with prompt”: A method which takes in a string (assumed to be\nthe response from a language model) and a prompt (assumed to be the\nprompt that generated such a response) and parses it into some\nstructure. 
The prompt is largely provided in the event the\nOutputParser wants to retry or fix the output in some way, and needs\ninformation from the prompt to do so.Get started\u200bBelow we go over the main type of output parser, the\nPydanticOutputParser.from langchain.output_parsers import PydanticOutputParserfrom langchain.prompts import PromptTemplatefrom langchain_core.pydantic_v1 import BaseModel, Field, validatorfrom langchain_openai import OpenAImodel = OpenAI(model_name="gpt-3.5-turbo-instruct", temperature=0.0)# Define your desired data structure.class Joke(BaseModel): setup: str = Field(description="question to set up a joke") punchline: str = Field(description="answer to resolve the joke") # You can add custom validation logic easily with Pydantic. @validator("setup") def question_ends_with_question_mark(cls, field): if field[-1] != "?": raise ValueError("Badly formed question!") return field# Set up a parser + inject instructions into the prompt template.parser = PydanticOutputParser(pydantic_object=Joke)prompt = PromptTemplate( template="Answer the user query.\\n{format_instructions}\\n{query}\\n", input_variables=["query"], partial_variables={"format_instructions": parser.get_format_instructions()},)# And a query intended to prompt a language model to populate the data structure.prompt_and_model = prompt | modeloutput = prompt_and_model.invoke({"query": "Tell me a joke."})parser.invoke(output)Joke(setup=\'Why did the chicken cross the road?\', punchline=\'To get to the other side!\')LCEL\u200bOutput parsers implement the Runnable\ninterface, the basic building\nblock of the LangChain Expression Language\n(LCEL). 
This means they support invoke,\nainvoke, stream, astream, batch, abatch, astream_log calls.Output parsers accept a string or BaseMessage as input and can return\nan arbitrary type.parser.invoke(output)Joke(setup=\'Why did the chicken cross the road?\', punchline=\'To get to the other side!\')Instead of manually invoking the parser, we also could’ve just added it --- --- \n\n\n\n\n\n\n\nSelf-querying | 🦜️🔗 Langchain --- Skip to main contentDocsUse casesIntegrationsGuidesAPIMorePeopleVersioningChangelogContributingTemplatesCookbooksTutorialsYouTube🦜️🔗LangSmithLangSmith DocsLangServe GitHubTemplates GitHubTemplates HubLangChain HubJS/TS Docs💬SearchGet startedIntroductionInstallationQuickstartSecurityLangChain Expression LanguageGet startedWhy use LCELInterfaceStreamingHow toCookbookLangChain Expression Language (LCEL)ModulesModel I/ORetrievalDocument loadersText SplittersRetrievalText embedding modelsVector storesRetrieversVector store-backed retrieverMultiQueryRetrieverContextual compressionEnsemble RetrieverLong-Context ReorderMultiVector RetrieverParent Document RetrieverSelf-queryingTime-weighted vector store retrieverIndexingAgentsChainsMoreLangServeLangSmithLangGraphModulesRetrievalRetrieversSelf-queryingOn this pageSelf-queryingHead to Integrations for\ndocumentation on vector stores with built-in support for self-querying.A self-querying retriever is one that, as the name suggests, has the\nability to query itself. Specifically, given any natural language query,\nthe retriever uses a query-constructing LLM chain to write a structured\nquery and then applies that structured query to its underlying\nVectorStore. This allows the retriever to not only use the user-input\nquery for semantic similarity comparison with the contents of stored\ndocuments but to also extract filters from the user query on the\nmetadata of stored documents and to execute those filters.Get started\u200bFor demonstration purposes we’ll use a Chroma vector store. 
We’ve\ncreated a small demo set of documents that contain summaries of movies.Note: The self-query retriever requires you to have lark package\ninstalled.%pip install --upgrade --quiet lark chromadbfrom langchain_community.vectorstores import Chromafrom langchain_core.documents import Documentfrom langchain_openai import OpenAIEmbeddingsdocs = [ Document( page_content="A bunch of scientists bring back dinosaurs and mayhem breaks loose", metadata={"year": 1993, "rating": 7.7, "genre": "science fiction"}, ), Document( page_content="Leo DiCaprio gets lost in a dream within a dream within a dream within a ...", metadata={"year": 2010, "director": "Christopher Nolan", "rating": 8.2}, ), Document( page_content="A psychologist / detective gets lost in a series of dreams within dreams within dreams and Inception reused the idea", metadata={"year": 2006, "director": "Satoshi Kon", "rating": 8.6}, ), Document( page_content="A bunch of normal-sized women are supremely wholesome and some men pine after them", metadata={"year": 2019, "director": "Greta Gerwig", "rating": 8.3}, ), Document( page_content="Toys come alive and have a blast doing so", metadata={"year": 1995, "genre": "animated"}, ), Document( page_content="Three men walk into the Zone, three men walk out of the Zone", metadata={ "year": 1979, "director": "Andrei Tarkovsky", "genre": "thriller", "rating": 9.9, }, ),]vectorstore = Chroma.from_documents(docs, OpenAIEmbeddings())Creating our self-querying retriever\u200bNow we can instantiate our retriever. To do this we’ll need to provide\nsome information upfront about the metadata fields that our documents --- support and a short description of the document contents.from langchain.chains.query_constructor.base import AttributeInfofrom langchain.retrievers.self_query.base import SelfQueryRetrieverfrom langchain_openai import ChatOpenAImetadata_field_info = [ AttributeInfo( name="genre", description="The genre of the movie. 
One of [\'science fiction\', \'comedy\', \'drama\', \'thriller\', \'romance\', \'action\', \'animated\']", type="string", ), AttributeInfo( name="year", description="The year the movie was released", type="integer", ), AttributeInfo( name="director", description="The name of the movie director", type="string", ), AttributeInfo( name="rating", description="A 1-10 rating for the movie", type="float" ),]document_content_description = "Brief summary of a movie"llm = ChatOpenAI(temperature=0)retriever = SelfQueryRetriever.from_llm( llm, vectorstore, document_content_description, metadata_field_info,)Testing it out\u200bAnd now we can actually try using our retriever!# This example only specifies a filterretriever.invoke("I want to watch a movie rated higher than 8.5")[Document(page_content=\'Three men walk into the Zone, three men walk out of the Zone\', metadata={\'director\': \'Andrei Tarkovsky\', \'genre\': \'thriller\', \'rating\': 9.9, \'year\': 1979}), Document(page_content=\'A psychologist / detective gets lost in a series of dreams within dreams within dreams and Inception reused the idea\', metadata={\'director\': \'Satoshi Kon\', \'rating\': 8.6, \'year\': 2006})]# This example specifies a query and a filterretriever.invoke("Has Greta Gerwig directed any movies about women")[Document(page_content=\'A bunch of normal-sized women are supremely wholesome and some men pine after them\', metadata={\'director\': \'Greta Gerwig\', \'rating\': 8.3, \'year\': 2019})]# This example specifies a composite filterretriever.invoke("What\'s a highly rated (above 8.5) science fiction film?")[Document(page_content=\'A psychologist / detective gets lost in a series of dreams within dreams within dreams and Inception reused the idea\', metadata={\'director\': \'Satoshi Kon\', \'rating\': 8.6, \'year\': 2006}), Document(page_content=\'Three men walk into the Zone, three men walk out of the Zone\', metadata={\'director\': \'Andrei Tarkovsky\', \'genre\': \'thriller\', \'rating\': 
9.9, \'year\': 1979})]# This example specifies a query and composite filterretriever.invoke( "What\'s a movie after 1990 but before 2005 that\'s all about toys, and preferably is animated")[Document(page_content=\'Toys come alive and have a blast doing so\', metadata={\'genre\': \'animated\', \'year\': 1995})]Filter k\u200bWe can also use the self query retriever to specify k: the number of\ndocuments to fetch.We can do this by passing enable_limit=True to the constructor.retriever = SelfQueryRetriever.from_llm( llm, vectorstore, document_content_description, metadata_field_info, enable_limit=True,)# This example only specifies a relevant queryretriever.invoke("What are two movies about dinosaurs")[Document(page_content=\'A bunch of scientists bring back dinosaurs and mayhem breaks loose\', metadata={\'genre\': \'science fiction\', \'rating\': 7.7, \'year\': 1993}), Document(page_content=\'Toys come alive and have a blast doing so\', metadata={\'genre\': \'animated\', \'year\': 1995})]Constructing from scratch with LCEL\u200bTo see what’s going on under the hood, and to have more custom control, --- we can reconstruct our retriever from scratch.First, we need to create a query-construction chain. This chain will\ntake a user query and generated a StructuredQuery object which\ncaptures the filters specified by the user. We provide some helper\nfunctions for creating a prompt and output parser. 
These have a number --- of tunable params that we’ll ignore here for simplicity.from langchain.chains.query_constructor.base import ( StructuredQueryOutputParser, get_query_constructor_prompt,)prompt = get_query_constructor_prompt( document_content_description, metadata_field_info,)output_parser = StructuredQueryOutputParser.from_components()query_constructor = prompt | llm | output_parserLet’s look at our prompt:print(prompt.format(query="dummy question"))Your goal is to structure the user\'s query to match the request schema provided below.<< Structured Request Schema >>When responding use a markdown code snippet with a JSON object formatted in the following schema:```json{ "query": string \\ text string to compare to document contents "filter": string \\ logical condition statement for filtering documents}```The query string should contain only text that is expected to match the contents of documents. Any conditions in the filter should not be mentioned in the query as well.A logical condition statement is composed of one or more comparison and logical operation statements.A comparison statement takes the form: `comp(attr, val)`:- `comp` (eq | ne | gt | gte | lt | lte | contain | like | in | nin): comparator- `attr` (string): name of attribute to apply the comparison to- `val` (string): is the comparison valueA logical operation statement takes the form `op(statement1, statement2, ...)`:- `op` (and | or | not): logical operator- `statement1`, `statement2`, ... 
(comparison statements or logical operation statements): one or more statements to apply the operation toMake sure that you only use the comparators and logical operators listed above and no others.Make sure that filters only refer to attributes that exist in the data source.Make sure that filters only use the attributed names with its function names if there are functions applied on them.Make sure that filters only use format `YYYY-MM-DD` when handling date data typed values.Make sure that filters take into account the descriptions of attributes and only make comparisons that are feasible given the type of data being stored.Make sure that filters are only used as needed. If there are no filters that should be applied return "NO_FILTER" for the filter value.<< Example 1. >>Data Source:```json{ "content": "Lyrics of a song", "attributes": { "artist": { "type": "string", "description": "Name of the song artist" }, "length": { "type": "integer", "description": "Length of the song in seconds" }, "genre": { "type": "string", "description": "The song genre, one of "pop", "rock" or "rap"" } }}```User Query:What are songs by Taylor Swift or Katy Perry about teenage romance under 3 minutes long in the dance pop genreStructured Request:```json{ "query": "teenager love", "filter": "and(or(eq(\\"artist\\", \\"Taylor Swift\\"), eq(\\"artist\\", \\"Katy Perry\\")), lt(\\"length\\", 180), eq(\\"genre\\", \\"pop\\"))"}```<< Example 2. >>Data Source:```json{ "content": "Lyrics of a song", "attributes": { "artist": { "type": "string", "description": "Name of the song artist" }, "length": { "type": "integer", "description": "Length of the song in seconds" }, "genre": --- { "type": "string", "description": "The song genre, one of "pop", "rock" or "rap"" } }}```User Query:What are songs that were not published on SpotifyStructured Request:```json{ "query": "", "filter": "NO_FILTER"}```<< Example 3. 
>>Data Source:```json{ "content": "Brief summary of a movie", "attributes": { "genre": { "description": "The genre of the movie. One of [\'science fiction\', \'comedy\', \'drama\', \'thriller\', \'romance\', \'action\', \'animated\']", "type": "string" }, "year": { "description": "The year the movie was released", "type": "integer" }, "director": { "description": "The name of the movie director", "type": "string" }, "rating": { "description": "A 1-10 rating for the movie", "type": "float" }}}```User Query:dummy questionStructured Request:And what our full chain produces:query_constructor.invoke( { "query": "What are some sci-fi movies from the 90\'s directed by Luc Besson about taxi drivers" })StructuredQuery(query=\'taxi driver\', filter=Operation(operator=<Operator.AND: \'and\'>, arguments=[Comparison(comparator=<Comparator.EQ: \'eq\'>, attribute=\'genre\', value=\'science fiction\'), Operation(operator=<Operator.AND: \'and\'>, arguments=[Comparison(comparator=<Comparator.GTE: \'gte\'>, attribute=\'year\', value=1990), Comparison(comparator=<Comparator.LT: \'lt\'>, attribute=\'year\', value=2000)]), Comparison(comparator=<Comparator.EQ: \'eq\'>, attribute=\'director\', value=\'Luc Besson\')]), limit=None)The query constructor is the key element of the self-query retriever. To --- make a great retrieval system you’ll need to make sure your query\nconstructor works well. Often this requires adjusting the prompt, the\nexamples in the prompt, the attribute descriptions, etc. For an example\nthat walks through refining a query constructor on some hotel inventory\ndata, check out this\ncookbook.The next key element is the structured query translator. This is the\nobject responsible for translating the generic StructuredQuery object\ninto a metadata filter in the syntax of the vector store you’re using.\nLangChain comes with a number of built-in translators. 
To see them all\nhead to the Integrations\nsection.from langchain.retrievers.self_query.chroma import ChromaTranslatorretriever = SelfQueryRetriever( query_constructor=query_constructor, vectorstore=vectorstore, structured_query_translator=ChromaTranslator(),)retriever.invoke( "What\'s a movie after 1990 but before 2005 that\'s all about toys, and preferably is animated")[Document(page_content=\'Toys come alive and have a blast doing so\', metadata={\'genre\': \'animated\', \'year\': 1995})]Help us out by providing feedback on this documentation page:PreviousParent Document RetrieverNextTime-weighted vector store retrieverGet startedCreating our self-querying retrieverTesting it outFilter kConstructing from scratch with LCELCommunityDiscordTwitterGitHubPythonJS/TSMoreHomepageBlogYouTubeCopyright © 2024 LangChain, Inc. --- --- \n\n\n\n\n\n\n\nWhy use LCEL | 🦜️🔗 Langchain --- Skip to main contentDocsUse casesIntegrationsGuidesAPIMorePeopleVersioningChangelogContributingTemplatesCookbooksTutorialsYouTube🦜️🔗LangSmithLangSmith DocsLangServe GitHubTemplates GitHubTemplates HubLangChain HubJS/TS Docs💬SearchGet startedIntroductionInstallationQuickstartSecurityLangChain Expression LanguageGet startedWhy use LCELInterfaceStreamingHow toCookbookLangChain Expression Language (LCEL)ModulesModel I/ORetrievalAgentsChainsMoreLangServeLangSmithLangGraphLangChain Expression LanguageWhy use LCELOn this pageWhy use LCELWe recommend reading the LCEL Get\nstarted section first.LCEL makes it easy to build complex chains from basic components. It\ndoes this by providing: 1. A unified interface: Every LCEL object\nimplements the Runnable interface, which defines a common set of\ninvocation methods (invoke, batch, stream, ainvoke, …). This\nmakes it possible for chains of LCEL objects to also automatically\nsupport these invocations. That is, every chain of LCEL objects is\nitself an LCEL object. 2. 
Composition primitives: LCEL provides a\nnumber of primitives that make it easy to compose chains, parallelize\ncomponents, add fallbacks, dynamically configure chain internal, and\nmore.To better understand the value of LCEL, it’s helpful to see it in action\nand think about how we might recreate similar functionality without it.\nIn this walkthrough we’ll do just that with our basic\nexample from the\nget started section. We’ll take our simple prompt + model chain, which\nunder the hood already defines a lot of functionality, and see what it\nwould take to recreate all of it.%pip install --upgrade --quiet langchain-core langchain-openai langchain-anthropicInvoke\u200bIn the simplest case, we just want to pass in a topic string and get --- cream")LCEL\u200bEvery component has built-in integrations with LangSmith. If we set the --- LCEL Interface, which we’ve only\npartially covered here. - Exploring the\nHow-to section to learn about\nadditional composition primitives that LCEL provides. - Looking through\nthe Cookbook section to see LCEL\nin action for common use cases. A good next use case to look at would be\nRetrieval-augmented\ngeneration.Help us out by providing feedback on this documentation page:PreviousGet startedNextInterfaceInvokeStreamBatchAsyncLLM instead of chat modelDifferent model providerRuntime configurabilityLoggingFallbacksFull code comparisonNext stepsCommunityDiscordTwitterGitHubPythonJS/TSMoreHomepageBlogYouTubeCopyright © 2024 LangChain, Inc. 
--- --- \n\n\n\n\n\n\n\nStreaming | 🦜️🔗 Langchain --- Skip to main contentDocsUse casesIntegrationsGuidesAPIMorePeopleVersioningChangelogContributingTemplatesCookbooksTutorialsYouTube🦜️🔗LangSmithLangSmith DocsLangServe GitHubTemplates GitHubTemplates HubLangChain HubJS/TS Docs💬SearchGet startedIntroductionInstallationQuickstartSecurityLangChain Expression LanguageGet startedWhy use LCELInterfaceStreamingHow toCookbookLangChain Expression Language (LCEL)ModulesModel I/ORetrievalAgentsChainsMoreLangServeLangSmithLangGraphLangChain Expression LanguageStreamingOn this pageStreaming With LangChainStreaming is critical in making applications based on LLMs feel\nresponsive to end-users.Important LangChain primitives like LLMs, parsers, prompts, retrievers,\nand agents implement the LangChain Runnable\nInterface.This interface provides two general approaches to stream content:sync stream and async astream: a default implementation of\nstreaming that streams the final output from the chain.async astream_events and async astream_log: these provide a way\nto stream both intermediate steps and final output from the\nchain.Let’s take a look at both approaches, and try to understand how to use\nthem. 
🥷Using Stream\u200bAll Runnable objects implement a sync method called stream and an\nasync variant called astream.These methods are designed to stream the final output in chunks,\nyielding each chunk as soon as it is available.Streaming is only possible if all steps in the program know how to\nprocess an input stream; i.e., process an input chunk one at a time,\nand yield a corresponding output chunk.The complexity of this processing can vary, from straightforward tasks\nlike emitting tokens produced by an LLM, to more challenging ones like\nstreaming parts of JSON results before the entire JSON is complete.The best place to start exploring streaming is with the single most\nimportant components in LLMs apps– the LLMs themselves!LLMs and Chat Models\u200bLarge language models and their chat variants are the primary bottleneck\nin LLM based apps. 🙊Large language models can take several seconds to generate a\ncomplete response to a query. This is far slower than the ~200-300\nms threshold at which an application feels responsive to an end user.The key strategy to make the application feel more responsive is to show\nintermediate progress; viz., to stream the output from the model token\nby token.We will show examples of streaming using the chat model from\nAnthropic. To use the model,\nyou will need to install the langchain-anthropic package. You can do\nthis with the following command:pip install -qU langchain-anthropic# Showing the example using anthropic, but you can use# your favorite chat model!from langchain_anthropic import ChatAnthropicmodel = ChatAnthropic()chunks = []async for chunk in model.astream("hello. tell me something about yourself"): chunks.append(chunk) print(chunk.content, end="|", flush=True) Hello|!| My| name| is| Claude|.| I|\'m| an| AI| assistant| created| by| An|throp|ic| to| be| helpful|,| harmless|,| and| honest|.||Let’s inspect one of the chunkschunks[0]AIMessageChunk(content=\' Hello\')We got back something called an AIMessageChunk. 
This chunk represents\na part of an AIMessage.Message chunks are additive by design – one can simply add them up to\nget the state of the response so far!chunks[0] + chunks[1] + chunks[2] + chunks[3] + chunks[4]AIMessageChunk(content=\' Hello! My name is\')Chains\u200bVirtually all LLM applications involve more steps than just a call to a\nlanguage model.Let’s build a simple chain using LangChain Expression Language\n(LCEL) that combines a prompt, model and a parser and verify that\nstreaming works.We will use StrOutputParser to parse the output from the model. This\nis a simple parser that extracts the content field from an\nAIMessageChunk, giving us the token returned by the model.tipLCEL is a declarative way to specify a “program” by chainining\ntogether different LangChain primitives. Chains created using LCEL\nbenefit from an automatic implementation of stream and astream --- --- \n\n\n\n\n\n\n\nInterface | 🦜️🔗 Langchain --- transforms this into a Python string, which is returned from the\ninvoke method.Next steps\u200bWe recommend reading our Why use LCEL\nsection next to see a side-by-side comparison of the code needed to\nproduce common functionality with and without LCEL.Help us out by providing feedback on this documentation page:PreviousLangChain Expression Language (LCEL)NextWhy use LCELBasic example: prompt + model + output parser1. Prompt2. Model3. Output parser4. Entire PipelineRAG Search ExampleNext stepsCommunityDiscordTwitterGitHubPythonJS/TSMoreHomepageBlogYouTubeCopyright © 2024 LangChain, Inc. 
---

Using tools | 🦜️🔗 Langchain

Using tools

You can use any Tools with Runnables easily.

%pip install --upgrade --quiet langchain langchain-openai duckduckgo-search

from langchain.tools import DuckDuckGoSearchRun
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate
from langchain_openai import ChatOpenAI

search = DuckDuckGoSearchRun()

template = """turn the following user input into a search query for a search engine:

{input}"""
prompt = ChatPromptTemplate.from_template(template)

model = ChatOpenAI()

chain = prompt | model | StrOutputParser() | search

chain.invoke({"input": "I'd like to figure out what games are tonight"})

'What sports games are on TV today & tonight? Watch and stream live sports on TV today, tonight, tomorrow. Today\'s 2023 sports TV schedule includes football, basketball, baseball, hockey, motorsports, soccer and more. Watch on TV or stream online on ESPN, FOX, FS1, CBS, NBC, ABC, Peacock, Paramount+, fuboTV, local channels and many other networks. MLB Games Tonight: How to Watch on TV, Streaming & Odds - Thursday, September 7. Seattle Mariners\' Julio Rodriguez greets teammates in the dugout after scoring against the Oakland Athletics in a ... Circle - Country Music and Lifestyle. Live coverage of all the MLB action today is available to you, with the information provided below. The Brewers will look to pick up a road win at PNC Park against the Pirates on Wednesday at 12:35 PM ET. Check out the latest odds and with BetMGM Sportsbook. Use bonus code "GNPLAY" for special offers! MLB Games Tonight: How to Watch on TV, Streaming & Odds - Tuesday, September 5. Houston Astros\' Kyle Tucker runs after hitting a double during the fourth inning of a baseball game against the Los Angeles Angels, Sunday, Aug. 13, 2023, in Houston. (AP Photo/Eric Christian Smith) (APMedia) The Houston Astros versus the Texas Rangers is one of ... The second half of tonight\'s college football schedule still has some good games remaining to watch on your television.. We\'ve already seen an exciting one when Colorado upset TCU. And we saw some ...'

---

Querying a SQL DB | 🦜️🔗 Langchain ---

Querying a SQL DB

We can replicate our SQLDatabaseChain with Runnables.

%pip install --upgrade --quiet langchain langchain-openai

from langchain_core.prompts import ChatPromptTemplate

template = """Based on the table schema below, write a SQL query that would answer the user's question:
{schema}

Question: {question}
SQL Query:"""
prompt = ChatPromptTemplate.from_template(template)

from langchain_community.utilities import SQLDatabase

We'll need the Chinook sample DB for this example. There are many places to download it from, e.g. https://database.guide/2-sample-databases-sqlite/

db = SQLDatabase.from_uri("sqlite:///./Chinook.db")

def get_schema(_):
    return db.get_table_info()

def run_query(query):
    return db.run(query)

from langchain_core.output_parsers import StrOutputParser
from langchain_core.runnables import RunnablePassthrough
from langchain_openai import ChatOpenAI

model = ChatOpenAI()

sql_response = (
    RunnablePassthrough.assign(schema=get_schema)
    | prompt
    | model.bind(stop=["\nSQLResult:"])
    | StrOutputParser()
)

sql_response.invoke({"question": "How many employees are there?"})

'SELECT COUNT(*) FROM Employee'

template = """Based on the table schema below, question, sql query, and sql response, write a natural language response:
{schema}

Question: {question}
SQL Query: {query}
SQL Response: {response}"""
prompt_response = ChatPromptTemplate.from_template(template)

full_chain = (
    RunnablePassthrough.assign(query=sql_response).assign(
        schema=get_schema,
        response=lambda x: db.run(x["query"]),
    )
    | prompt_response
    | model
)

full_chain.invoke({"question": "How many employees are there?"})

AIMessage(content='There are 8 employees.', additional_kwargs={}, example=False)

---

RAG | 🦜️🔗 Langchain ---

---

Managing prompt size | 🦜️🔗 Langchain ---

Managing prompt size

Agents dynamically call tools. The results of those tool calls are added back to the prompt, so that the agent can plan the next action. Depending on what tools are being used and how they're being called, the agent prompt can easily grow larger than the model context window.

With LCEL, it's easy to add custom functionality for managing the size of prompts within your chain or agent. Let's look at simple agent --- trace

Unfortunately we run out of space in our model's context window before the agent can get to the final answer. Now let's add some prompt handling logic. To keep things simple, if our messages have too many tokens we'll start dropping the earliest AI, Function message pairs (this is the model tool invocation message and the subsequent tool --- trace
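The prompt-handling idea described above (drop the earliest AI/Function message pairs once the prompt is too large) can be sketched in plain Python. This is an illustration under stated assumptions, not the code from the LangChain page: `count_tokens` and `trim_steps` are hypothetical helpers, messages are modelled as simple `(role, text)` tuples, and tokens are approximated by whitespace-separated words.

```python
def count_tokens(messages):
    """Rough token estimate: whitespace-separated words (an assumption for illustration)."""
    return sum(len(text.split()) for _, text in messages)


def trim_steps(messages, max_tokens):
    """Drop the earliest (ai, function) pairs until the estimate fits the budget.

    `messages` is a list of (role, text) tuples. The first entry is the user
    question and is always kept; the rest are assumed to alternate ai/function.
    """
    head, steps = messages[:1], list(messages[1:])
    while steps and count_tokens(head + steps) > max_tokens:
        # Remove one tool-invocation message plus the tool result that follows it.
        steps = steps[2:]
    return head + steps


history = [
    ("human", "who won the game"),                   # 4 words
    ("ai", "call search tool"),                      # 3 words
    ("function", "a very long search result text"),  # 6 words
    ("ai", "call lookup tool"),                      # 3 words
    ("function", "short answer"),                    # 2 words
]

trimmed = trim_steps(history, max_tokens=10)
print(trimmed)
```

Dropping messages in pairs keeps every remaining tool result next to the invocation that produced it, which is the reason the source text talks about pairs rather than single messages.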
---

Prompt + LLM | 🦜️🔗 Langchain ---

Prompt + LLM

The most common and valuable composition is taking:

PromptTemplate / ChatPromptTemplate -> LLM / ChatModel -> OutputParser

Almost any other chains you build will use this building block.

PromptTemplate + LLM

The simplest composition is just combining a prompt and model to create a chain that takes user input, adds it to a prompt, passes it to a model, and returns the raw model output.

Note, you can mix and match PromptTemplate/ChatPromptTemplates and LLMs/ChatModels as you like here.

%pip install --upgrade --quiet langchain langchain-openai

from langchain_core.prompts import ChatPromptTemplate
from langchain_openai import ChatOpenAI

prompt = ChatPromptTemplate.from_template("tell me a joke about {foo}")
model = ChatOpenAI()
chain = prompt | model

chain.invoke({"foo": "bears"})

AIMessage(content="Why don't bears wear shoes?\n\nBecause they have bear feet!", additional_kwargs={}, example=False)

Often times we want to attach kwargs that'll be passed to each model call. Here are a few examples of that:

Attaching Stop Sequences

chain = prompt | model.bind(stop=["\n"])

chain.invoke({"foo": "bears"})

AIMessage(content='Why did the bear never wear shoes?', additional_kwargs={}, example=False)

Attaching Function Call information

functions = [
    {
        "name": "joke",
        "description": "A joke",
        "parameters": {
            "type": "object",
            "properties": {
                "setup": {"type": "string", "description": "The setup for the joke"},
                "punchline": {
                    "type": "string",
                    "description": "The punchline for the joke",
                },
            },
            "required": ["setup", "punchline"],
        },
    }
]
chain = prompt | model.bind(function_call={"name": "joke"}, functions=functions)

chain.invoke({"foo": "bears"}, config={})

AIMessage(content='', additional_kwargs={'function_call': {'name': 'joke', 'arguments': '{\n "setup": "Why don\'t bears wear shoes?",\n "punchline": "Because they have bear feet!"\n}'}}, example=False)

PromptTemplate + LLM + OutputParser

We can also add in an output parser to easily transform the raw LLM/ChatModel output into a more workable format

from langchain_core.output_parsers import StrOutputParser

chain = prompt | model | StrOutputParser()

Notice that this now returns a string - a much more workable format for downstream tasks

chain.invoke({"foo": "bears"})

"Why don't bears wear shoes?\n\nBecause they have bear feet!"

Functions Output Parser

When you specify the function to return, you may just want to parse that ---

---

Multiple chains | 🦜️🔗 Langchain ---

Multiple chains

Runnables can easily be used to string together multiple Chains

%pip install --upgrade --quiet langchain langchain-openai

from operator import itemgetter

from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate
from langchain_openai import ChatOpenAI

prompt1 = ChatPromptTemplate.from_template("what is the city {person} is from?")
prompt2 = ChatPromptTemplate.from_template(
    "what country is the city {city} in? respond in {language}"
)

model = ChatOpenAI()

chain1 = prompt1 | model | StrOutputParser()

chain2 = (
    {"city": chain1, "language": itemgetter("language")}
    | prompt2
    | model
    | StrOutputParser()
)

chain2.invoke({"person": "obama", "language": "spanish"})

'El país donde se encuentra la ciudad de Honolulu, donde nació Barack Obama, el 44º Presidente de los Estados Unidos, es Estados Unidos. Honolulu se encuentra en la isla de Oahu, en el estado de Hawái.'

from langchain_core.runnables import RunnablePassthrough

prompt1 = ChatPromptTemplate.from_template(
    "generate a {attribute} color. Return the name of the color and nothing else:"
)
prompt2 = ChatPromptTemplate.from_template(
    "what is a fruit of color: {color}. Return the name of the fruit and nothing else:"
)
prompt3 = ChatPromptTemplate.from_template(
    "what is a country with a flag that has the color: {color}. Return the name of the country and nothing else:"
)
prompt4 = ChatPromptTemplate.from_template(
    "What is the color of {fruit} and the flag of {country}?"
)

model_parser = model | StrOutputParser()

color_generator = (
    {"attribute": RunnablePassthrough()} | prompt1 | {"color": model_parser}
)
color_to_fruit = prompt2 | model_parser
color_to_country = prompt3 | model_parser
question_generator = (
    color_generator | {"fruit": color_to_fruit, "country": color_to_country} | prompt4
)

question_generator.invoke("warm")

ChatPromptValue(messages=[HumanMessage(content='What is the color of strawberry and the flag of China?', additional_kwargs={}, example=False)])

prompt = question_generator.invoke("warm")
model.invoke(prompt)

AIMessage(content='The color of an apple is typically red or green. The flag of China is predominantly red with a large yellow star in the upper left corner and four smaller yellow stars surrounding it.', additional_kwargs={}, example=False)

Branching and Merging

You may want the output of one component to be processed by 2 or more other components. RunnableParallels let you split or fork the chain so multiple components can process the input in parallel. Later, other components can join or merge the results to synthesize a final response.
This type of chain creates a computation ---

---

Adding moderation | 🦜️🔗 Langchain

Adding moderation

This shows how to add in moderation (or other safeguards) around your LLM application.

%pip install --upgrade --quiet langchain langchain-openai

from langchain.chains import OpenAIModerationChain
from langchain_core.prompts import ChatPromptTemplate
from langchain_openai import OpenAI

moderate = OpenAIModerationChain()

model = OpenAI()
prompt = ChatPromptTemplate.from_messages([("system", "repeat after me: {input}")])

chain = prompt | model

chain.invoke({"input": "you are stupid"})

'\n\nYou are stupid.'

moderated_chain = chain | moderate

moderated_chain.invoke({"input": "you are stupid"})

{'input': '\n\nYou are stupid', 'output': "Text was found that violates OpenAI's content policy."}

---

Adding memory | 🦜️🔗 Langchain ---

Adding memory

This shows how to add memory to an arbitrary chain. Right now, you can use the memory classes but need to hook it up manually

%pip install --upgrade --quiet langchain langchain-openai

from operator import itemgetter

from langchain.memory import ConversationBufferMemory
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_core.runnables import RunnableLambda, RunnablePassthrough
from langchain_openai import ChatOpenAI

model = ChatOpenAI()
prompt = ChatPromptTemplate.from_messages(
    [
        ("system", "You are a helpful chatbot"),
        MessagesPlaceholder(variable_name="history"),
        ("human", "{input}"),
    ]
)

memory = ConversationBufferMemory(return_messages=True)

memory.load_memory_variables({})

{'history': []}

chain = (
    RunnablePassthrough.assign(
        history=RunnableLambda(memory.load_memory_variables) | itemgetter("history")
    )
    | prompt
    | model
)

inputs = {"input": "hi im bob"}
response = chain.invoke(inputs)
response

AIMessage(content='Hello Bob! How can I assist you today?', additional_kwargs={}, example=False)

memory.save_context(inputs, {"output": response.content})

memory.load_memory_variables({})

{'history': [HumanMessage(content='hi im bob', additional_kwargs={}, example=False), AIMessage(content='Hello Bob! How can I assist you today?', additional_kwargs={}, example=False)]}

inputs = {"input": "whats my name"}
response = chain.invoke(inputs)
response

AIMessage(content='Your name is Bob.', additional_kwargs={}, example=False)

---

Routing by semantic similarity | 🦜️🔗 Langchain ---

Routing by semantic similarity

With LCEL you can easily add custom routing logic to your chain to dynamically determine the chain logic based on user input.
All you need to do is define a function that given an input returns a Runnable.

One especially useful technique is to use embeddings to route a query to ---

---

Code writing | 🦜️🔗 Langchain

Code writing

Example of how to use LCEL to write Python code.

%pip install --upgrade --quiet langchain-core langchain-experimental langchain-openai

from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import (
    ChatPromptTemplate,
)
from langchain_experimental.utilities import PythonREPL
from langchain_openai import ChatOpenAI

template = """Write some python code to solve the user's problem. Return only python code in Markdown format, e.g.:```python....```"""
prompt = ChatPromptTemplate.from_messages([("system", template), ("human", "{input}")])

model = ChatOpenAI()

def _sanitize_output(text: str):
    _, after = text.split("```python")
    return after.split("```")[0]

chain = prompt | model | StrOutputParser() | _sanitize_output | PythonREPL().run

chain.invoke({"input": "whats 2 plus 2"})

Python REPL can execute arbitrary code. Use with caution.

'4\n'

---

Agents | 🦜️🔗 Langchain ---

Agents

You can pass a Runnable into an agent. Make sure you have langchainhub installed: pip install langchainhub

from langchain import hub
from langchain.agents import AgentExecutor, tool
from langchain.agents.output_parsers import XMLAgentOutputParser
from langchain_community.chat_models import ChatAnthropic

model = ChatAnthropic(model="claude-2")

@tool
def search(query: str) -> str:
    """Search things about current events."""
    return "32 degrees"

tool_list = [search]

# Get the prompt to use - you can modify this!
prompt = hub.pull("hwchase17/xml-agent-convo")

# Logic for going from intermediate steps to a string to pass into model
# This is pretty tied to the prompt
def convert_intermediate_steps(intermediate_steps):
    log = ""
    for action, observation in intermediate_steps:
        log += (
            f"<tool>{action.tool}</tool><tool_input>{action.tool_input}"
            f"</tool_input><observation>{observation}</observation>"
        )
    return log

# Logic for converting tools to string to go in prompt
def convert_tools(tools):
    return "\n".join([f"{tool.name}: {tool.description}" for tool in tools])

Building an agent from a runnable usually involves a few things:

- Data processing for the intermediate steps. These need to be represented in a way that the language model can recognize them. This should be pretty tightly coupled to the instructions in the prompt
- The prompt itself
- The model, complete with stop tokens if needed
- The output parser - should be in sync with how the prompt specifies things to be formatted.

agent = (
    {
        "input": lambda x: x["input"],
        "agent_scratchpad": lambda x: convert_intermediate_steps(
            x["intermediate_steps"]
        ),
    }
    | prompt.partial(tools=convert_tools(tool_list))
    | model.bind(stop=["</tool_input>", "</final_answer>"])
    | XMLAgentOutputParser()
)

agent_executor = AgentExecutor(agent=agent, tools=tool_list, verbose=True)

agent_executor.invoke({"input": "whats the weather in New york?"})

> Entering new AgentExecutor chain...
 <tool>search</tool><tool_input>weather in New York32 degrees <tool>search</tool><tool_input>weather in New York32 degrees <final_answer>The weather in New York is 32 degrees
> Finished chain.

{'input': 'whats the weather in New york?', 'output': 'The weather in New York is 32 degrees'}

---

Cookbook | 🦜️🔗 Langchain ---

Cookbook

Example code for accomplishing common tasks with the LangChain Expression Language (LCEL). These examples show how to compose different Runnable (the core LCEL interface) components to achieve various tasks. If you're just getting acquainted with LCEL, the Prompt + LLM page is a good place to start.

- Prompt + LLM: The most common and valuable composition is taking:
- RAG: Let's look at adding in a retrieval step to a prompt and LLM, which adds
- Multiple chains: Runnables can easily be used to string together multiple Chains
- Querying a SQL DB: We can replicate our SQLDatabaseChain with Runnables.
- Agents: You can pass a Runnable into an agent.
Make sure you have langchainhub
- Code writing: Example of how to use LCEL to write Python code.
- Routing by semantic similarity: With LCEL you can easily add [custom routing
- Adding memory: This shows how to add memory to an arbitrary chain. Right now, you can
- Adding moderation: This shows how to add in moderation (or other safeguards) around your
- Managing prompt size: Agents dynamically call tools. The results of those tool calls are added
- Using tools: You can use any Tools with Runnables easily.

---

LangChain Expression Language (LCEL) | 🦜️🔗 Langchain ---

LangChain Expression Language (LCEL)

LangChain Expression Language, or LCEL, is a declarative way to easily compose chains together. LCEL was designed from day 1 to support putting prototypes in production, with no code changes, from the simplest “prompt + LLM” chain to the most complex chains (we've seen folks successfully run LCEL chains with 100s of steps in production). To highlight a few of the reasons you might want to use LCEL:

Streaming support
When you build your chains with LCEL you get the best possible time-to-first-token (time elapsed until the first chunk of output comes out). For some chains this means, e.g., we stream tokens straight from an LLM to a streaming output parser, and you get back parsed, incremental chunks of output at the same rate as the LLM provider outputs the raw tokens.

Async support
Any chain built with LCEL can be called both with the synchronous API (e.g. in your Jupyter notebook while prototyping) as well as with the asynchronous API (e.g. in a LangServe server). This enables using the same code for prototypes and in production, with great performance, and the ability to handle many concurrent requests in the same server.

Optimized parallel execution
Whenever your LCEL chains have steps that can be executed in parallel (e.g. if you fetch documents from multiple retrievers) we automatically do it, both in the sync and the async interfaces, for the smallest possible latency.

Retries and fallbacks
Configure retries and fallbacks for any part of your LCEL chain. This is a great way to make your chains more reliable at scale. We're currently working on adding streaming support for retries/fallbacks, so you can get the added reliability without any latency cost.

Access intermediate results
For more complex chains it's often very useful to access the results of intermediate steps even before the final output is produced. This can be used to let end-users know something is happening, or even just to debug your chain. You can stream intermediate results, and it's available on every LangServe server.

Input and output schemas
Input and output schemas give every LCEL chain Pydantic and JSONSchema schemas inferred from the structure of your chain. This can be used for validation of inputs and outputs, and is an integral part of LangServe.

Seamless LangSmith tracing integration
As your chains get more and more complex, it becomes increasingly important to understand what exactly is happening at every step. With LCEL, all steps are automatically logged to LangSmith for maximum observability and debuggability.

Seamless LangServe deployment integration
Any chain created with LCEL can be easily deployed using LangServe.',
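To make the idea of declaratively composing steps with `|` more concrete, here is a small sketch in plain Python. `Step` is a hypothetical class invented for this example; it only imitates the pipe syntax and is not how LangChain implements Runnables, and the "model" below is a stand-in function rather than a real LLM call.

```python
class Step:
    """Hypothetical wrapper that lets plain functions be chained with `|`."""

    def __init__(self, func):
        self.func = func

    def __or__(self, other):
        # Compose left to right: the output of this step feeds the next one.
        return Step(lambda value: other.func(self.func(value)))

    def invoke(self, value):
        return self.func(value)


# Three toy steps mirroring the prompt -> model -> output parser pattern.
prompt = Step(lambda data: f"tell me a joke about {data['foo']}")
model = Step(lambda text: {"content": text.upper()})  # stand-in for a model call
parser = Step(lambda message: message["content"])

chain = prompt | model | parser
result = chain.invoke({"foo": "bears"})
print(result)
```

Because each `|` returns another `Step`, a composed chain exposes the same `invoke` method as its parts, which is the property that lets pieces be swapped or extended without changing the calling code.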